Unlocking Efficiency: What is Dynamic Programming and How Does It Work?

In the realm of computer science and algorithm design, efficiency is paramount. When faced with complex problems that seem computationally intractable, dynamic programming emerges as a powerful technique to break them down into manageable subproblems. But what is dynamic programming, and why is it so crucial for optimizing solutions across various domains? This article delves into the core principles of dynamic programming, exploring its methodologies, applications, and advantages. We’ll examine how it elegantly tackles problems by storing and reusing solutions to overlapping subproblems, thereby avoiding redundant computations and drastically improving performance.

Understanding the Core Concepts

At its heart, dynamic programming is an algorithmic paradigm that divides a complex problem into smaller, overlapping subproblems, solves each subproblem only once, and stores the results in a table (often called a memoization table) so they never have to be recomputed. This stands in contrast to naive recursive solutions, which can take exponential time because they recalculate the same subproblems over and over. A problem must possess two key properties to be suitable for this technique:

- Optimal Substructure: A problem exhibits optimal substructure if an optimal solution to the overall problem can be constructed from optimal solutions to its subproblems. This implies that the best way to solve the entire problem involves combining the best solutions to its constituent parts.

- Overlapping Subproblems: The problem involves solving the same subproblems multiple times. This is where dynamic programming truly shines, as it stores the solutions to these subproblems and reuses them whenever needed, avoiding redundant computation.
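To see overlapping subproblems concretely, the following minimal sketch counts how often a naive recursive Fibonacci function is called with each argument. The names `naive_fib` and `calls` are illustrative choices made for this example, not part of any library.

```python
from collections import Counter

calls = Counter()  # how many times each argument is evaluated


def naive_fib(n: int) -> int:
    """Plain recursion with no caching; records every call it receives."""
    calls[n] += 1
    if n < 2:
        return n
    return naive_fib(n - 1) + naive_fib(n - 2)


result = naive_fib(10)
print(result)                # 55
print(sum(calls.values()))   # 177 calls in total
print(calls[2])              # 34: the subproblem for n = 2 was solved 34 times
```

Only 11 distinct subproblems exist here (n = 0 through 10), yet the function is invoked 177 times. That gap is the redundant work dynamic programming removes.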

Two Main Approaches: Top-Down (Memoization) and Bottom-Up (Tabulation)

When applying dynamic programming, two primary approaches can be employed: top-down (memoization) and bottom-up (tabulation). Each method has its own strengths and is suitable for different scenarios.

Top-Down (Memoization)

The top-down approach, also known as memoization, involves breaking the problem down into smaller subproblems and recursively solving them. However, unlike a naive recursive solution, memoization stores the results of each subproblem in a table (usually a hash map or an array). Before solving a subproblem, the algorithm checks if the solution is already stored in the table. If it is, the stored solution is returned directly; otherwise, the subproblem is solved, and the solution is stored in the table for future use. This approach is often more intuitive and easier to implement, as it closely mirrors the recursive structure of the problem.
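As a hedged illustration of the top-down pattern, the sketch below finds the cheapest path through a grid of costs when only moves to the right or downward are allowed. The problem and the function name `min_path_cost_top_down` are assumptions chosen for this example; `functools.lru_cache` from the Python standard library stands in for the memoization table.

```python
from functools import lru_cache


def min_path_cost_top_down(grid: list[list[int]]) -> int:
    """Cheapest path from top-left to bottom-right, moving only right or down."""

    @lru_cache(maxsize=None)  # the cache acts as the memoization table
    def best(r: int, c: int) -> int:
        if r == 0 and c == 0:
            return grid[0][0]  # base case: the starting cell
        candidates = []
        if r > 0:
            candidates.append(best(r - 1, c))  # arrive from the cell above
        if c > 0:
            candidates.append(best(r, c - 1))  # arrive from the cell to the left
        return grid[r][c] + min(candidates)

    return best(len(grid) - 1, len(grid[0]) - 1)


print(min_path_cost_top_down([[1, 3, 1], [1, 5, 1], [4, 2, 1]]))  # 7
```

The recursion reads almost exactly like the recurrence itself, which is the main appeal of this style. One caveat: very large inputs can exceed Python's recursion limit.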

Bottom-Up (Tabulation)

The bottom-up approach, or tabulation, takes a different tack. It starts by solving the smallest subproblems and gradually builds up to the larger ones. The solutions to the subproblems are stored in a table, and each entry in the table is computed based on the solutions to previously solved subproblems. This approach typically involves iterating through the table in a specific order, ensuring that all necessary subproblems are solved before they are needed. The bottom-up approach can be more efficient than memoization in some cases, as it avoids the overhead of recursive function calls and the risk of exhausting the call stack. Memoization, in turn, solves only the subproblems that are actually reached, which can help when many table entries are never needed.
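A minimal sketch of tabulation, assuming an illustrative problem: find the cheapest path through a grid of costs from the top-left to the bottom-right cell, moving only right or down. The function name `min_path_cost_bottom_up` is hypothetical. The table is filled row by row so that every entry a cell depends on is already available.

```python
def min_path_cost_bottom_up(grid: list[list[int]]) -> int:
    """Cheapest path from top-left to bottom-right, moving only right or down."""
    rows, cols = len(grid), len(grid[0])
    # table[r][c] holds the cheapest cost of reaching cell (r, c)
    table = [[0] * cols for _ in range(rows)]

    for r in range(rows):
        for c in range(cols):
            if r == 0 and c == 0:
                best_prev = 0                      # starting cell
            elif r == 0:
                best_prev = table[r][c - 1]        # first row: only from the left
            elif c == 0:
                best_prev = table[r - 1][c]        # first column: only from above
            else:
                best_prev = min(table[r - 1][c], table[r][c - 1])
            table[r][c] = grid[r][c] + best_prev

    return table[-1][-1]


print(min_path_cost_bottom_up([[1, 3, 1], [1, 5, 1], [4, 2, 1]]))  # 7
```

No recursion is involved, so the only limit on input size is the memory needed for the table.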

Illustrative Examples of Dynamic Programming

To make these ideas concrete, let’s explore some classic examples where this technique proves invaluable:

Fibonacci Sequence

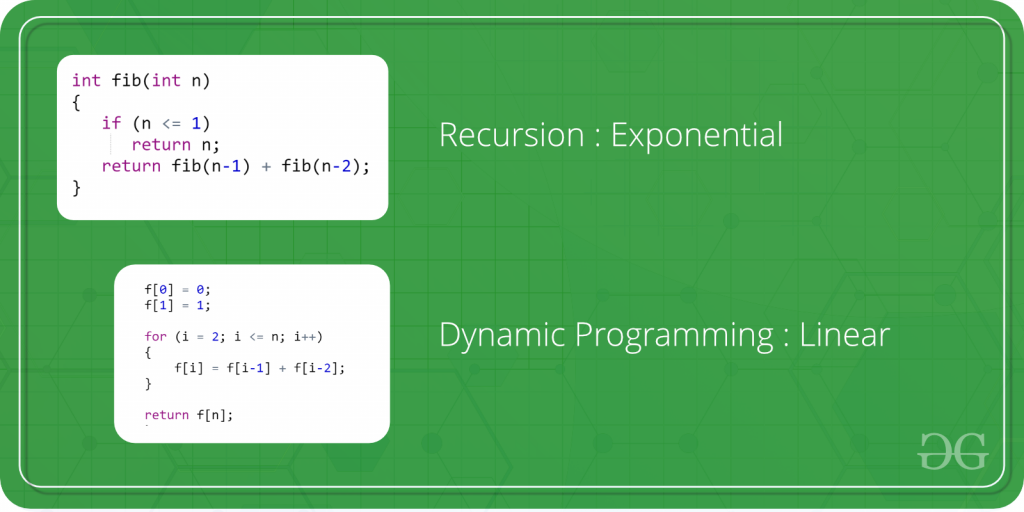

The Fibonacci sequence (0, 1, 1, 2, 3, 5, 8, …) is a classic example used to illustrate dynamic programming. The nth Fibonacci number is defined as the sum of the (n-1)th and (n-2)th Fibonacci numbers. A naive recursive implementation would repeatedly compute the same Fibonacci numbers, leading to exponential time complexity. Dynamic programming, using either memoization or tabulation, can reduce the time complexity to linear time.
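Both routes to a linear-time Fibonacci can be sketched in a few lines. The function names `fib_memo` and `fib_tab` are illustrative, and the sketch assumes the indexing F(0) = 0, F(1) = 1 implied by the sequence above.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def fib_memo(n: int) -> int:
    """Top-down: recursion plus a cache, so each n is computed once."""
    if n < 2:
        return n
    return fib_memo(n - 1) + fib_memo(n - 2)


def fib_tab(n: int) -> int:
    """Bottom-up: fill a table from the base cases upward."""
    if n < 2:
        return n
    table = [0] * (n + 1)
    table[1] = 1
    for i in range(2, n + 1):
        table[i] = table[i - 1] + table[i - 2]
    return table[n]


print(fib_memo(30), fib_tab(30))  # 832040 832040
```

Each version performs a number of additions proportional to n. Since every entry depends only on the two before it, the table in `fib_tab` could be replaced by two variables if memory matters.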

Knapsack Problem

The knapsack problem (in its common 0/1 form, where each item is either taken or left) involves selecting a subset of items, each with a weight and a value, to maximize the total value while staying within a given weight limit. This classic optimization problem can be solved with dynamic programming in time proportional to the number of items multiplied by the weight limit, which is efficient as long as the limit is a reasonably small integer. The algorithm builds a table representing the maximum value that can be obtained for each possible weight limit, considering each item in turn.
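The table described above can be sketched as follows, assuming non-negative integer weights and that each item may be used at most once. The function name `knapsack_max_value` and the sample data are made up for illustration.

```python
def knapsack_max_value(weights: list[int], values: list[int], capacity: int) -> int:
    """Maximum total value of items whose combined weight is at most `capacity`."""
    # best[cap] = best value achievable with weight limit `cap`
    # using only the items considered so far
    best = [0] * (capacity + 1)

    for weight, value in zip(weights, values):
        # Walk capacities downward so each item is counted at most once:
        # best[cap - weight] still refers to the state before this item.
        for cap in range(capacity, weight - 1, -1):
            best[cap] = max(best[cap], best[cap - weight] + value)

    return best[capacity]


print(knapsack_max_value([1, 3, 4, 5], [1, 4, 5, 7], 7))  # 9 (items of weight 3 and 4)
```

Iterating capacities in increasing order instead would let an item be reused, which solves a different variant (the unbounded knapsack).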

Longest Common Subsequence (LCS)

Given two sequences, the longest common subsequence problem aims to find the longest sequence that is a subsequence of both input sequences. This problem has applications in bioinformatics, text editing, and data compression. Dynamic programming can be used to build a table representing the length of the longest common subsequence for each pair of prefixes of the input sequences.
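A minimal sketch of the prefix table for LCS length, using strings as the sequences. The function name `lcs_length` is illustrative; `table[i][j]` holds the answer for the first `i` characters of `a` and the first `j` characters of `b`.

```python
def lcs_length(a: str, b: str) -> int:
    """Length of the longest common subsequence of a and b."""
    # Row 0 and column 0 represent empty prefixes, whose LCS length is 0.
    table = [[0] * (len(b) + 1) for _ in range(len(a) + 1)]

    for i in range(1, len(a) + 1):
        for j in range(1, len(b) + 1):
            if a[i - 1] == b[j - 1]:
                # Matching characters extend the LCS of the shorter prefixes.
                table[i][j] = table[i - 1][j - 1] + 1
            else:
                # Otherwise drop one character from either sequence.
                table[i][j] = max(table[i - 1][j], table[i][j - 1])

    return table[len(a)][len(b)]


print(lcs_length("AGGTAB", "GXTXAYB"))  # 4 ("GTAB")
```

The subsequence itself, not just its length, can be recovered by walking back through the table from the bottom-right corner.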

Edit Distance (Levenshtein Distance)

The edit distance between two strings measures the minimum number of operations (insertions, deletions, or substitutions) required to transform one string into the other. This problem is commonly used in spell checking and DNA sequencing. Dynamic programming can be used to build a table representing the edit distance between each pair of prefixes of the input strings.
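The prefix table for edit distance might look like the sketch below, assuming each insertion, deletion, and substitution costs 1. The function name `edit_distance` is an illustrative choice.

```python
def edit_distance(source: str, target: str) -> int:
    """Minimum number of single-character edits turning source into target."""
    m, n = len(source), len(target)
    # dist[i][j] = edit distance between source[:i] and target[:j]
    dist = [[0] * (n + 1) for _ in range(m + 1)]

    for i in range(m + 1):
        dist[i][0] = i  # delete all i characters
    for j in range(n + 1):
        dist[0][j] = j  # insert all j characters

    for i in range(1, m + 1):
        for j in range(1, n + 1):
            substitution = 0 if source[i - 1] == target[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,                 # deletion
                dist[i][j - 1] + 1,                 # insertion
                dist[i - 1][j - 1] + substitution,  # match or substitution
            )

    return dist[m][n]


print(edit_distance("kitten", "sitting"))  # 3
```

The running time and memory are both proportional to the product of the two string lengths.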

Advantages of Dynamic Programming

The benefits of employing dynamic programming are manifold, particularly when dealing with problems exhibiting optimal substructure and overlapping subproblems:

- Efficiency: By storing and reusing solutions to subproblems, dynamic programming avoids redundant computations, leading to significant performance improvements, often reducing exponential time complexity to polynomial time complexity.

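- Optimality: When the problem has optimal substructure and the recurrence is formulated correctly, dynamic programming is guaranteed to find an optimal solution, because every candidate choice is accounted for through the stored subproblem results rather than being enumerated one by one.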

- Clarity: While the initial implementation may require careful thought, the resulting code is often more structured and easier to understand compared to naive recursive solutions.

- Wide Applicability: Dynamic programming can be applied to a wide range of problems in various domains, including computer science, engineering, economics, and operations research.

Disadvantages and Considerations

Despite its many advantages, dynamic programming also has some limitations that should be considered:

- Space Complexity: Dynamic programming typically requires storing solutions to subproblems in a table, which can consume significant memory, especially for large problem instances.

- Complexity of Implementation: Implementing dynamic programming solutions can be challenging, requiring careful consideration of the order in which subproblems are solved and the structure of the memoization table.

- Suitability: Dynamic programming is not suitable for all problems. It is most effective when the problem exhibits optimal substructure and overlapping subproblems.
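The space concern listed above can sometimes be eased. When each table row depends only on the row before it, keeping two rows is enough. The sketch below applies that idea to the length of the longest common subsequence of two strings; the function name `lcs_length_two_rows` is hypothetical, and the trick is one common option rather than a universal fix.

```python
def lcs_length_two_rows(a: str, b: str) -> int:
    """LCS length using memory proportional to len(b) instead of len(a) * len(b)."""
    previous = [0] * (len(b) + 1)  # row for the prefix of `a` processed so far

    for ch_a in a:
        current = [0]  # column 0: empty prefix of `b`
        for j, ch_b in enumerate(b, start=1):
            if ch_a == ch_b:
                current.append(previous[j - 1] + 1)
            else:
                current.append(max(previous[j], current[j - 1]))
        previous = current  # the older row is no longer needed

    return previous[-1]


print(lcs_length_two_rows("AGGTAB", "GXTXAYB"))  # 4
```

The trade-off is that the discarded rows can no longer be used to reconstruct the subsequence itself, only its length.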

When to Use Dynamic Programming

Dynamic programming is most suitable for problems that meet the following criteria:

- The problem can be broken down into smaller subproblems, and the same subproblems are solved multiple times (overlapping subproblems).

- An optimal solution to the overall problem can be constructed from optimal solutions to its subproblems (optimal substructure).

If a problem meets these criteria, dynamic programming can be a powerful tool for optimizing its solution. Knowing how to recognize such problems is a valuable skill for any aspiring computer scientist or software engineer.

Conclusion

In conclusion, dynamic programming is a powerful algorithmic technique that exploits optimal substructure and overlapping subproblems to solve complex problems efficiently. By storing and reusing solutions to subproblems, it avoids redundant computation and, for problems with optimal substructure, finds an optimal solution. While it has its limitations, dynamic programming remains a fundamental tool in the arsenal of any computer scientist or software engineer. Mastering it can unlock significant performance improvements and make problems tractable that would otherwise take exponential time. Whether implemented through memoization or tabulation, it provides a structured and efficient way to approach optimization problems, making it a cornerstone of algorithm design.

Further exploration of specific dynamic programming problems and their implementations can provide a deeper understanding of this technique. The ability to recognize and apply dynamic programming is a hallmark of a skilled problem solver. Knowing the definition is just the first step; the real power lies in applying it creatively to solve real-world problems.