Unlocking Efficiency: A Comprehensive Guide to Dynamic Programming

In the realm of computer science and algorithm design, efficiency reigns supreme. Among the various techniques employed to optimize solutions, dynamic programming stands out as a powerful and versatile approach. This article delves into the core principles of dynamic programming, exploring its methodologies, applications, and advantages. We aim to provide a clear and concise understanding of this essential algorithmic paradigm, suitable for both beginners and experienced programmers.

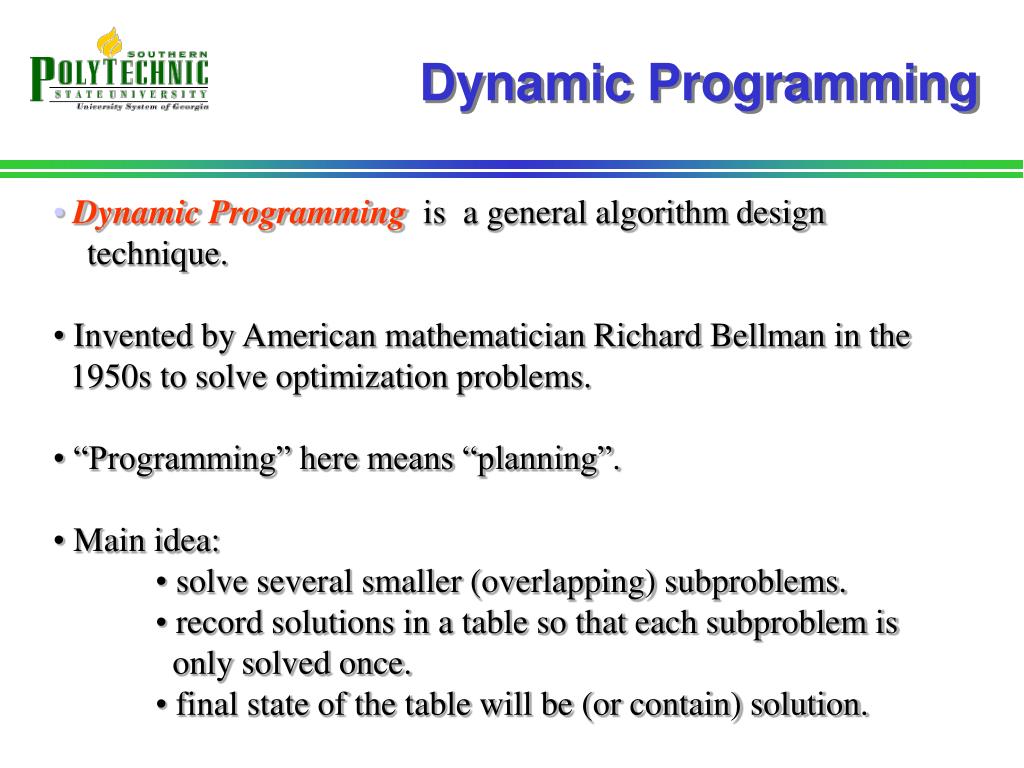

What is Dynamic Programming?

Dynamic programming is an algorithmic technique that solves complex problems by breaking them down into smaller overlapping subproblems. Unlike divide-and-conquer approaches, which solve subproblems independently, dynamic programming solves each subproblem only once and stores its solution. This avoids redundant computations and significantly improves efficiency, especially for problems with a high degree of overlapping subproblems. The core idea is to build up solutions to larger problems from the solutions to smaller ones, leveraging the principle of optimality.

Essentially, when the number of distinct subproblems is polynomial, dynamic programming can turn an exponential-time recursive solution into a polynomial-time one, dramatically improving performance for certain classes of problems. It achieves this by solving each distinct subproblem once and storing its result in a table (tabulation) or a cache (memoization).

Key Concepts of Dynamic Programming

Understanding the fundamental concepts is crucial for effectively applying dynamic programming. These concepts include:

- Optimal Substructure: A problem exhibits optimal substructure if an optimal solution to the problem contains optimal solutions to its subproblems. This means that the optimal solution can be constructed from the optimal solutions of its constituent subproblems.

- Overlapping Subproblems: A problem has overlapping subproblems if the same subproblems are encountered multiple times during the recursive solution. Dynamic programming exploits this property by solving each subproblem only once and storing the result for future use.

- Memoization: This is a top-down approach where we store the results of expensive function calls and return the cached result when the same inputs occur again. It essentially remembers the solutions to subproblems to avoid recomputation.

- Tabulation: This is a bottom-up approach where we systematically fill in a table of solutions to subproblems, starting with the smallest subproblems and working our way up to the larger ones.
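To make the memoization idea concrete, here is a minimal sketch of a hand-written caching decorator. The names `memoize` and `grid_paths` are hypothetical, and counting right/down paths on a lattice is an illustrative problem chosen for this sketch, not one from the article. In practice, Python's standard library already provides `functools.lru_cache` and `functools.cache` for the same purpose.

```python
from functools import wraps


def memoize(func):
    """Cache func's results keyed by its positional arguments (hypothetical helper)."""
    cache = {}

    @wraps(func)
    def wrapper(*args):
        # Compute a subproblem only the first time these arguments are seen.
        if args not in cache:
            cache[args] = func(*args)
        return cache[args]

    return wrapper


@memoize
def grid_paths(rows, cols):
    """Count right/down paths from (0, 0) to (rows, cols) on a lattice."""
    if rows == 0 or cols == 0:
        return 1  # only one straight path along an edge
    # The recursive calls go through the memoized wrapper, so shared
    # subproblems such as grid_paths(r - 1, c - 1) are solved only once.
    return grid_paths(rows - 1, cols) + grid_paths(rows, cols - 1)


print(grid_paths(10, 10))  # 184756
```

Without the decorator the same function recomputes many cells repeatedly; with it, each `(rows, cols)` pair is evaluated at most once.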

Two Approaches to Dynamic Programming

There are primarily two approaches to implementing dynamic programming:

Top-Down with Memoization

The top-down approach uses recursion to break down the problem into subproblems. However, instead of recomputing the solutions to overlapping subproblems, it stores them in a memoization table (usually a dictionary or array). When the function encounters a subproblem it has already solved, it simply retrieves the solution from the table instead of recomputing it. This approach is often more intuitive and easier to implement, especially for problems that are naturally expressed recursively.

For example, consider calculating the nth Fibonacci number. A naive recursive implementation would repeatedly compute the same Fibonacci numbers multiple times. With memoization, we store the calculated Fibonacci numbers in a table, avoiding redundant computations.
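The amount of redundant work can be measured directly. The sketch below counts how many times the function body runs for a naive recursive Fibonacci and for one memoized with the standard library's `functools.lru_cache`; the `calls` dictionary is an illustrative counter added only for this comparison.

```python
from functools import lru_cache

calls = {"naive": 0, "memo": 0}  # illustrative call counters


def fib_naive(n):
    calls["naive"] += 1
    if n <= 1:
        return n
    return fib_naive(n - 1) + fib_naive(n - 2)


@lru_cache(maxsize=None)
def fib_memo(n):
    calls["memo"] += 1  # runs only on a cache miss
    if n <= 1:
        return n
    return fib_memo(n - 1) + fib_memo(n - 2)


print(fib_naive(20), calls["naive"])  # 6765 21891
print(fib_memo(20), calls["memo"])    # 6765 21
```

The naive version makes 21,891 calls to compute F(20), while the memoized version evaluates each of F(0) through F(20) exactly once.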

Bottom-Up with Tabulation

The bottom-up approach starts by solving the smallest subproblems and then uses their solutions to build up the solutions to larger subproblems. It typically involves filling in a table of solutions in a systematic order. This approach is often more efficient than the top-down approach because it avoids the overhead of recursive function calls and cannot run into a recursion-depth limit; on the other hand, it may solve subproblems that a top-down search would never need. It can also be less intuitive and require more careful planning to determine the correct order for filling in the table.

Using the Fibonacci example again, the bottom-up approach would start by calculating F(0) and F(1), then use these values to calculate F(2), F(3), and so on, until it reaches F(n). The calculated values are stored in a table, allowing for efficient access.
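Choosing a fill order matters more once the table has two dimensions. As a minimal sketch, the hypothetical function below counts right/down lattice paths from cell (0, 0) to cell (rows, cols), an illustrative problem not taken from this article. Each cell depends on the cell above it and the cell to its left, so filling row by row, left to right, guarantees both are ready.

```python
def grid_paths_table(rows, cols):
    """Bottom-up count of right/down lattice paths from (0, 0) to (rows, cols)."""
    # table[r][c] holds the number of paths to (r, c); row 0 and column 0
    # are base cases with exactly one path each, so start every cell at 1.
    table = [[1] * (cols + 1) for _ in range(rows + 1)]
    # Row-by-row order ensures table[r - 1][c] and table[r][c - 1]
    # are already computed when table[r][c] is filled.
    for r in range(1, rows + 1):
        for c in range(1, cols + 1):
            table[r][c] = table[r - 1][c] + table[r][c - 1]
    return table[rows][cols]


print(grid_paths_table(10, 10))  # 184756
```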

Applications of Dynamic Programming

Dynamic programming has a wide range of applications in various fields, including:

- Computer Science: Algorithm design, compiler optimization, string matching, graph algorithms.

- Operations Research: Inventory management, resource allocation, scheduling.

- Bioinformatics: Sequence alignment, protein folding.

- Economics: Portfolio optimization, game theory.

Here are a few specific examples:

- Knapsack Problem: Determining the most valuable subset of items to include in a knapsack with a limited capacity.

- Longest Common Subsequence: Finding the longest sequence of characters that appears in the same order, though not necessarily contiguously, in two or more strings.

- Shortest Path Algorithms: Finding the shortest path between two nodes in a graph (e.g., the Bellman-Ford and Floyd-Warshall algorithms). Dijkstra's algorithm relies on the same optimal-substructure property, although it is often classified as a greedy algorithm.

- Edit Distance: Calculating the minimum number of edits (insertions, deletions, substitutions) required to transform one string into another.

- Matrix Chain Multiplication: Determining the optimal order to multiply a chain of matrices to minimize the number of scalar multiplications.
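For the knapsack problem in the list above, a common formulation is the 0/1 variant, where each item can be taken at most once. The sketch below assumes integer weights and keeps a one-dimensional table indexed by remaining capacity; the function name and sample data are illustrative.

```python
def knapsack(values, weights, capacity):
    """Best total value for a 0/1 knapsack (integer weights assumed)."""
    # best[w] = best value achievable with capacity w using the items seen so far.
    best = [0] * (capacity + 1)
    for value, weight in zip(values, weights):
        # Iterate capacities downward so each item is counted at most once.
        for w in range(capacity, weight - 1, -1):
            best[w] = max(best[w], best[w - weight] + value)
    return best[capacity]


print(knapsack([60, 100, 120], [10, 20, 30], 50))  # 220
```

Iterating capacities upward instead would let an item be reused, which solves the unbounded knapsack variant rather than the 0/1 one.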
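The longest common subsequence problem from the list above can be sketched for the two-string case (the general case of more strings needs a higher-dimensional table). The function name is illustrative. The table stores LCS lengths of prefixes, and a walk back from the last cell recovers one optimal subsequence.

```python
def lcs(a, b):
    """Return one longest common subsequence of strings a and b."""
    m, n = len(a), len(b)
    # L[i][j] = length of an LCS of the prefixes a[:i] and b[:j].
    L = [[0] * (n + 1) for _ in range(m + 1)]
    for i in range(1, m + 1):
        for j in range(1, n + 1):
            if a[i - 1] == b[j - 1]:
                L[i][j] = L[i - 1][j - 1] + 1
            else:
                L[i][j] = max(L[i - 1][j], L[i][j - 1])
    # Trace back from L[m][n] to rebuild one optimal subsequence.
    out = []
    i, j = m, n
    while i > 0 and j > 0:
        if a[i - 1] == b[j - 1]:
            out.append(a[i - 1])
            i -= 1
            j -= 1
        elif L[i - 1][j] >= L[i][j - 1]:
            i -= 1
        else:
            j -= 1
    return "".join(reversed(out))


print(lcs("AGGTAB", "GXTXAYB"))  # GTAB
```

When several subsequences tie for the longest, the tie-breaking rule in the trace-back decides which one is returned.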
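Among the shortest-path algorithms mentioned in the list above, Bellman-Ford has a direct dynamic-programming reading: the subproblem is "shortest distance to each node using at most k edges", and each pass over the edge list extends k by one. The sketch below assumes nodes numbered 0 to num_nodes - 1 and edges given as hypothetical (u, v, weight) tuples.

```python
import math


def bellman_ford(num_nodes, edges, source):
    """Shortest distances from source; edges are (u, v, weight) tuples.

    Raises ValueError if a negative-weight cycle is reachable from source.
    """
    dist = [math.inf] * num_nodes
    dist[source] = 0
    # After pass k, dist[v] is at most the length of the shortest path
    # to v that uses no more than k edges.
    for _ in range(num_nodes - 1):
        changed = False
        for u, v, w in edges:
            if dist[u] + w < dist[v]:
                dist[v] = dist[u] + w
                changed = True
        if not changed:
            break  # no distance improved, so all are final
    # Any further improvement now implies a negative-weight cycle.
    for u, v, w in edges:
        if dist[u] + w < dist[v]:
            raise ValueError("negative-weight cycle reachable from source")
    return dist


edges = [(0, 1, 4), (0, 2, 1), (2, 1, 2), (1, 3, 1), (2, 3, 5)]
print(bellman_ford(4, edges, 0))  # [0, 3, 1, 4]
```

Unreachable nodes keep a distance of `math.inf`.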
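The edit distance problem listed above (Levenshtein distance, with unit cost for each insertion, deletion, and substitution) can be tabulated over prefixes of the two strings. This is a minimal sketch; the function name is illustrative.

```python
def edit_distance(s, t):
    """Minimum insertions, deletions, and substitutions to turn s into t."""
    m, n = len(s), len(t)
    # D[i][j] = edit distance between the prefixes s[:i] and t[:j].
    D = [[0] * (n + 1) for _ in range(m + 1)]
    for i in range(m + 1):
        D[i][0] = i  # delete all i characters of s[:i]
    for j in range(n + 1):
        D[0][j] = j  # insert all j characters of t[:j]
    for i in range(1, m + 1):
        for j in range(1, n + 1):
            cost = 0 if s[i - 1] == t[j - 1] else 1
            D[i][j] = min(
                D[i - 1][j] + 1,         # delete s[i-1]
                D[i][j - 1] + 1,         # insert t[j-1]
                D[i - 1][j - 1] + cost,  # substitute, or keep a match for free
            )
    return D[m][n]


print(edit_distance("kitten", "sitting"))  # 3
```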
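For matrix chain multiplication from the list above, a sketch can describe the chain by a list of dimensions, assuming matrix A_i has shape dims[i-1] x dims[i]. The table is filled by increasing chain length so that every shorter sub-chain is already solved when a longer one is split. The function name is illustrative, and this version returns only the minimum cost, not the parenthesization.

```python
import math


def matrix_chain_cost(dims):
    """Minimum scalar multiplications to compute A1 A2 ... An.

    Matrix Ai has shape dims[i-1] x dims[i], so len(dims) == n + 1.
    """
    n = len(dims) - 1
    # cost[i][j] = cheapest way to multiply matrices i..j (0-based, inclusive).
    cost = [[0] * n for _ in range(n)]
    for length in range(2, n + 1):  # shortest chains first
        for i in range(n - length + 1):
            j = i + length - 1
            cost[i][j] = math.inf
            for k in range(i, j):  # split between matrix k and matrix k + 1
                c = cost[i][k] + cost[k + 1][j] + dims[i] * dims[k + 1] * dims[j + 1]
                cost[i][j] = min(cost[i][j], c)
    return cost[0][n - 1]


print(matrix_chain_cost([10, 30, 5, 60]))  # 4500
```

For dimensions 10x30, 30x5, and 5x60, computing (A1 A2) A3 costs 1,500 + 3,000 = 4,500 multiplications, while A1 (A2 A3) costs 27,000.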

Advantages and Disadvantages of Dynamic Programming

Like any algorithmic technique, dynamic programming has its own set of advantages and disadvantages:

Advantages

- Efficiency: Significantly reduces the time complexity of certain problems by avoiding redundant computations.

- Optimality: Guarantees finding the optimal solution for problems with optimal substructure.

- Versatility: Applicable to a wide range of problems in various domains.

Disadvantages

- Space Complexity: Can require significant memory to store the solutions to subproblems.

- Complexity: Can be challenging to design and implement, especially for complex problems.

- Applicability: Not suitable for all problems; only applicable to problems with optimal substructure and overlapping subproblems.

When to Use Dynamic Programming

Dynamic programming is most effective when the problem exhibits the following characteristics:

- Optimal Substructure: The optimal solution to the problem can be constructed from the optimal solutions of its subproblems.

- Overlapping Subproblems: The same subproblems are encountered multiple times during the recursive solution.

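If a problem lacks these characteristics, other algorithmic techniques may be more appropriate: when the subproblems do not overlap, plain divide-and-conquer is usually sufficient, and when a locally optimal choice can be shown to lead to a global optimum, a greedy algorithm is often simpler and faster.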
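A greedy shortcut is only safe when the problem has the greedy-choice property. The sketch below uses a hypothetical coin system {1, 3, 4} and assumes an unlimited supply of each coin to show a case where taking the largest coin first gives a suboptimal answer, while a bottom-up dynamic program over amounts finds the optimum. Both function names are illustrative.

```python
def min_coins_dp(coins, amount):
    """Fewest coins summing to amount (unlimited supply), or None if impossible."""
    INF = float("inf")
    best = [0] + [INF] * amount  # best[a] = fewest coins that make amount a
    for a in range(1, amount + 1):
        for c in coins:
            if c <= a and best[a - c] + 1 < best[a]:
                best[a] = best[a - c] + 1
    return None if best[amount] == INF else best[amount]


def min_coins_greedy(coins, amount):
    """Repeatedly take the largest coin that fits; not always optimal."""
    count = 0
    for c in sorted(coins, reverse=True):
        count += amount // c
        amount %= c
    return count if amount == 0 else None


coins = [1, 3, 4]
print(min_coins_greedy(coins, 6))  # 3  (4 + 1 + 1)
print(min_coins_dp(coins, 6))      # 2  (3 + 3)
```

For coin systems such as {1, 5, 10, 25} the greedy rule happens to be optimal, which is why it is worth checking the problem's structure before choosing a technique.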

Example: Calculating the nth Fibonacci Number

Let’s illustrate dynamic programming with a classic example: calculating the nth Fibonacci number. The Fibonacci sequence is defined as follows:

- F(0) = 0

- F(1) = 1

- F(n) = F(n-1) + F(n-2) for n > 1

Top-Down with Memoization (Python)

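```python
def fibonacci_memoization(n, memo=None):
    # Create a fresh cache per top-level call; a mutable default argument
    # (memo={}) would silently be shared across unrelated calls.
    if memo is None:
        memo = {}
    if n in memo:
        return memo[n]  # reuse a previously solved subproblem
    if n <= 1:
        return n  # base cases: F(0) = 0, F(1) = 1
    memo[n] = fibonacci_memoization(n - 1, memo) + fibonacci_memoization(n - 2, memo)
    return memo[n]


# Example usage
n = 10
print(f"The {n}th Fibonacci number is: {fibonacci_memoization(n)}")
```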

Bottom-Up with Tabulation (Python)

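```python
def fibonacci_tabulation(n):
    if n <= 1:
        return n  # base cases; also avoids writing table[1] when n == 0
    table = [0] * (n + 1)  # table[i] will hold F(i)
    table[0] = 0
    table[1] = 1
    # Fill in increasing order so table[i-1] and table[i-2] are ready.
    for i in range(2, n + 1):
        table[i] = table[i - 1] + table[i - 2]
    return table[n]


# Example usage
n = 10
print(f"The {n}th Fibonacci number is: {fibonacci_tabulation(n)}")
```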
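The tabulated version keeps a table of n + 1 entries, but each step reads only the previous two. A common refinement, sketched below with an illustrative function name, keeps just those two values and so uses constant extra space while still running in linear time.

```python
def fibonacci_constant_space(n):
    """Bottom-up Fibonacci keeping only the last two values."""
    prev, curr = 0, 1  # F(0), F(1)
    for _ in range(n):
        # Slide the window forward: (F(i), F(i+1)) -> (F(i+1), F(i+2)).
        prev, curr = curr, prev + curr
    return prev


print(fibonacci_constant_space(10))  # 55
```

The same trick applies to other tables where each row depends only on the row before it.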

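Both the memoization and tabulation versions run in O(n) time with O(n) extra space, whereas a naive recursive implementation makes an exponential number of calls because it recomputes the same values repeatedly. Because the memoized version is recursive, very large values of n can exceed Python's default recursion limit, which the tabulated version avoids.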

Conclusion

Dynamic programming is a powerful algorithmic technique that can significantly improve the efficiency of solving complex problems. By breaking a problem into smaller overlapping subproblems and storing their solutions, it avoids redundant computation, and when the problem has optimal substructure it is guaranteed to find an optimal solution. While it can be challenging to design and implement, its performance benefits make it an essential tool for any programmer or computer scientist.

The key is to analyze the problem thoroughly before writing code: identify the overlapping subproblems, define the optimal substructure, and then choose the appropriate approach (memoization or tabulation) to implement an efficient solution. Dynamic programming is a cornerstone of algorithm design, and with practice it becomes a valuable asset in your algorithmic toolbox. Further exploration of specific problem types and advanced dynamic programming techniques will continue to build upon this foundation.