Unlocking Conversational AI: A Deep Dive into RAG Chatbot Architecture

In the rapidly evolving landscape of Artificial Intelligence (AI), chatbots have emerged as a pivotal tool for businesses seeking to enhance customer engagement, streamline operations, and deliver personalized experiences. Among the various chatbot architectures, Retrieval-Augmented Generation (RAG) stands out as a particularly promising approach. This article provides a comprehensive exploration of RAG chatbot architecture, dissecting its components, advantages, challenges, and real-world applications. We will delve into how RAG combines the strengths of information retrieval and text generation to create chatbots that are not only conversational but also knowledgeable and contextually relevant.

Understanding the Core Principles of RAG Chatbots

RAG chatbot architecture represents a paradigm shift in how chatbots are designed and deployed. Unlike traditional chatbots that rely solely on pre-defined scripts or on knowledge stored in a trained model's parameters, RAG chatbots consult an external knowledge base at query time to augment their responses. This gives them access to information the model was never trained on, including content that changed after training, which enables more accurate, comprehensive, and nuanced answers to user queries. The core principle behind RAG chatbot architecture is to retrieve relevant information from the knowledge base and use it to inform the generation of a response. This involves two primary stages: retrieval and generation.

The Retrieval Stage

The retrieval stage identifies and extracts the most relevant information from the external knowledge base in response to a user's query. Common techniques include keyword (lexical) matching, such as BM25, which scores passages by the terms they share with the query; semantic search over vector embeddings, which compares dense representations of the query and each passage so that paraphrases can match even without shared words; and hybrid approaches that combine the two. The goal is to surface the handful of passages (the top k by score) most likely to help answer the user's question.
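To make vector-based retrieval concrete, here is a minimal sketch of top-k retrieval by cosine similarity. As an assumption that keeps the example dependency-free, the hypothetical `embed` function builds a term-frequency vector; that is really a lexical representation, so it only illustrates the scoring mechanics. A production system would swap in a trained embedding model (which is what lets paraphrases match) and a vector index instead of a linear scan.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a sparse term-frequency vector."""
    return Counter(tokenize(text))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors (0.0 if either is empty)."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, passages: list[str], k: int = 2) -> list[tuple[float, str]]:
    """Score every passage against the query and return the k best (score, passage) pairs."""
    q = embed(query)
    scored = [(cosine(q, embed(p)), p) for p in passages]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


passages = [
    "Orders ship within two business days.",
    "Returns are accepted within 30 days of delivery.",
    "Our support team is available by chat and email.",
]

for score, passage in retrieve("How many days do I have for returns?", passages):
    print(f"{score:.2f}  {passage}")
```

With these sample passages, the returns passage ranks first because it shares both "returns" and "days" with the query, while the shipping passage shares only "days".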

Several factors influence the effectiveness of the retrieval stage: the quality and organization of the knowledge base, the choice of retrieval algorithm, and the ability to handle ambiguous or complex queries. A well-designed knowledge base is structured so that relevant information can be retrieved efficiently, for example by splitting long documents into smaller passages, indexing them, tagging them with metadata, or organizing them hierarchically.
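One common way to organize a knowledge base for retrieval is to split long documents into smaller, overlapping chunks and attach an ID and tags to each one. The sketch below assumes word-based windows and a plain dictionary record; `chunk_words`, `build_index_entries`, the default window sizes, and the record layout are illustrative choices, not a standard format.

```python
def chunk_words(text: str, chunk_size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into windows of `chunk_size` words. Consecutive windows share
    `overlap` words, so text near a boundary appears in two chunks."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once this window has reached the end of the document.
        if start + chunk_size >= len(words):
            break
    return chunks


def build_index_entries(doc_id: str, text: str, tags: list[str],
                        chunk_size: int = 50, overlap: int = 10) -> list[dict]:
    """Turn one document into index records: a stable chunk ID, the text, and tags."""
    return [
        {"id": f"{doc_id}#{i}", "text": chunk, "tags": list(tags)}
        for i, chunk in enumerate(chunk_words(text, chunk_size, overlap))
    ]


sample = " ".join(str(i) for i in range(12))
for entry in build_index_entries("faq", sample, ["returns"], chunk_size=5, overlap=2):
    print(entry)
```

Smaller chunks make matches more precise but carry less surrounding context; the overlap reduces the chance that a relevant sentence is separated from the text that explains it.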

The Generation Stage

Once the relevant information has been retrieved, the generation stage synthesizes it into a coherent and informative response. This typically involves a large language model (LLM): the retrieved passages are inserted into the prompt alongside the user's query, and the LLM is instructed to answer from that context. This guides it to generate a response that is both relevant to the user's query and grounded in the knowledge base, rather than only in what the model learned during training.
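A minimal sketch of how retrieved passages can serve as context: they are numbered and placed in the prompt ahead of the question, with instructions to answer only from them and to cite sources. The instruction wording, the hypothetical `build_prompt` name, and the character budget (a rough stand-in for the model's token limit) are assumptions; the resulting string would be sent to whichever LLM the system uses.

```python
def build_prompt(question: str, passages: list[dict], max_chars: int = 2000) -> str:
    """Assemble a grounded prompt: numbered sources first, then the question.
    Passages are assumed to be sorted by relevance; lower-ranked ones are
    dropped once the context budget would be exceeded."""
    lines = [
        "Answer the question using only the sources below.",
        "Cite sources by number, e.g. [1]. If the sources do not contain",
        "the answer, say that you do not know.",
        "",
    ]
    used = 0
    for i, p in enumerate(passages, start=1):
        entry = f"[{i}] ({p['id']}) {p['text']}"
        if used + len(entry) > max_chars:
            break
        lines.append(entry)
        used += len(entry)
    lines += ["", f"Question: {question}", "Answer:"]
    return "\n".join(lines)


retrieved = [
    {"id": "kb-1", "text": "Returns are accepted within 30 days."},
    {"id": "kb-2", "text": "Orders ship in two days."},
]
print(build_prompt("What is the return window?", retrieved))
```

Asking the model to cite numbered sources also makes it straightforward to show users where an answer came from.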

The generation stage determines whether the chatbot's responses read naturally, and it shares responsibility for accuracy with retrieval: the LLM can only ground its answer in passages that retrieval actually surfaced. A capable LLM can integrate the retrieved information into a cohesive answer, providing users with a seamless and informative conversational experience. RAG therefore relies heavily on the LLM's ability to follow the supplied context and generate human-like text.

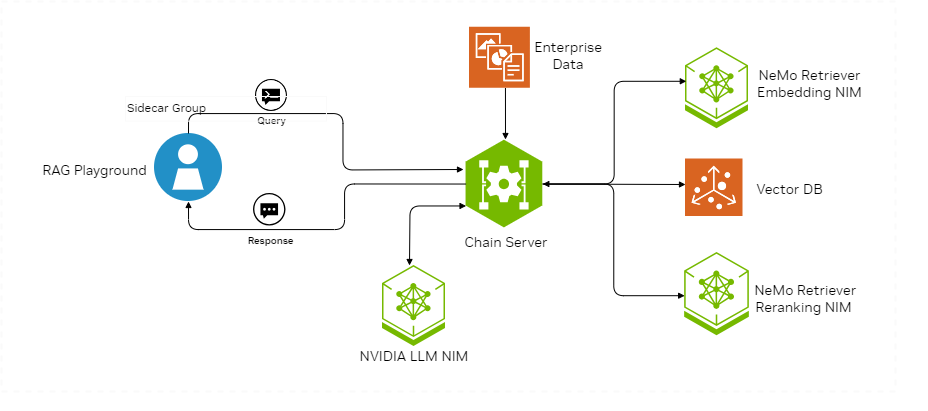

Key Components of a RAG Chatbot Architecture

A typical RAG chatbot architecture consists of several key components that work together to enable intelligent and conversational interactions. These components include:

- User Interface: The interface through which users interact with the chatbot, typically a chat window or a voice interface.

- Query Processing Module: This module is responsible for analyzing the user’s query and extracting relevant information, such as keywords and intent.

- Retrieval Module: This module searches the external knowledge base for relevant information based on the processed query.

- Knowledge Base: The external repository of information that the chatbot uses to augment its responses. This can be a collection of documents, a database, or any other structured or unstructured data source.

- Generation Module: This module uses the retrieved information to generate a coherent and informative response to the user’s query.

- Response Formatting Module: This module formats the generated response for display to the user, ensuring that it is clear, concise, and easy to understand.
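The components above can be wired together as a simple pipeline. This is a hypothetical, dependency-free sketch: the keyword-overlap retriever stands in for a real vector search, the knowledge base is a small in-memory list, and `generate` is a placeholder that echoes the best passage where a real system would call an LLM. The user interface is omitted; `chat` is the function it would call.

```python
import re
from dataclasses import dataclass, field

# Knowledge base record: where a passage came from and what it says.
@dataclass
class Passage:
    source: str
    text: str

@dataclass
class Answer:
    text: str
    sources: list[str] = field(default_factory=list)

STOPWORDS = {"the", "a", "an", "is", "are", "do", "i", "my", "what", "how", "of", "to", "for"}

def process_query(raw: str) -> set[str]:
    """Query processing module: normalize the query and keep content keywords."""
    return {t for t in re.findall(r"[a-z0-9]+", raw.lower()) if t not in STOPWORDS}

def retrieve(keywords: set[str], kb: list[Passage], k: int = 2) -> list[Passage]:
    """Retrieval module: rank passages by keyword overlap (stand-in for vector search)."""
    scored = []
    for p in kb:
        overlap = len(keywords & set(re.findall(r"[a-z0-9]+", p.text.lower())))
        if overlap:
            scored.append((overlap, p))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [p for _, p in scored[:k]]

def generate(question: str, context: list[Passage]) -> Answer:
    """Generation module placeholder: a real system would prompt an LLM with
    the question and context; here we return the best passage verbatim."""
    if not context:
        return Answer("I could not find that in the knowledge base.")
    return Answer(context[0].text, [p.source for p in context])

def format_response(answer: Answer) -> str:
    """Response formatting module: append source citations when there are any."""
    if not answer.sources:
        return answer.text
    return f"{answer.text}\nSources: {', '.join(answer.sources)}"

def chat(raw_query: str, kb: list[Passage]) -> str:
    """Run one user query through every module in order."""
    keywords = process_query(raw_query)
    context = retrieve(keywords, kb)
    return format_response(generate(raw_query, context))

kb = [
    Passage("faq/returns", "Returns are accepted within 30 days of delivery."),
    Passage("faq/shipping", "Orders ship within two business days."),
]
print(chat("How do returns work?", kb))
```

Keeping each module behind its own function makes it possible to replace one piece, such as the retriever, without touching the others.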

Benefits of Using RAG Chatbot Architecture

RAG chatbot architecture offers several advantages over traditional chatbot architectures, making it a compelling choice for organizations seeking to build intelligent and conversational AI solutions. These benefits include:

- Improved Accuracy: By grounding answers in an external knowledge base, RAG chatbots can provide more accurate and reliable answers and are less prone to inventing facts, provided that retrieval surfaces the relevant information.

- Enhanced Contextual Understanding: Retrieved passages supply domain-specific context that the underlying model may lack, allowing RAG chatbots to provide more relevant and personalized responses.

- Increased Scalability: The chatbot's knowledge can grow or change by adding and re-indexing documents in the knowledge base rather than retraining the underlying model.

- Reduced Training Costs: Because domain knowledge lives in the knowledge base rather than in the model's weights, RAG chatbots typically need less task-specific training data than chatbots that must be trained or fine-tuned on that knowledge.

- Enhanced Explainability: RAG chatbots can provide users with the sources of their information, making their responses more transparent and trustworthy.
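Two of these benefits, updating knowledge without retraining and citing sources, follow from keeping knowledge in an index rather than in model weights. The hypothetical `KeywordIndex` below is a tiny inverted index used as a stand-in for a vector database: adding, replacing, or removing a document takes effect on the next search, and every result carries its document ID so it can be shown to the user as a source.

```python
import re
from collections import defaultdict


class KeywordIndex:
    """Tiny inverted index (illustrative stand-in for a vector database)."""

    def __init__(self) -> None:
        self._postings: dict[str, set[str]] = defaultdict(set)  # token -> doc IDs
        self._docs: dict[str, str] = {}                          # doc ID -> text

    @staticmethod
    def _tokens(text: str) -> set[str]:
        return set(re.findall(r"[a-z0-9]+", text.lower()))

    def add_document(self, doc_id: str, text: str) -> None:
        """Add or replace a document; no model weights are involved."""
        if doc_id in self._docs:
            self.remove_document(doc_id)
        self._docs[doc_id] = text
        for token in self._tokens(text):
            self._postings[token].add(doc_id)

    def remove_document(self, doc_id: str) -> None:
        """Drop an outdated document from every posting list."""
        old_text = self._docs.pop(doc_id)
        for token in self._tokens(old_text):
            self._postings[token].discard(doc_id)

    def search(self, query: str, k: int = 3) -> list[tuple[str, str]]:
        """Return (doc_id, text) pairs ranked by the number of matching query terms."""
        hits: dict[str, int] = defaultdict(int)
        for token in self._tokens(query):
            for doc_id in self._postings.get(token, ()):
                hits[doc_id] += 1
        ranked = sorted(hits, key=lambda d: (-hits[d], d))
        return [(d, self._docs[d]) for d in ranked[:k]]


index = KeywordIndex()
index.add_document("returns-policy", "Returns accepted within 14 days.")
# The policy changes: update the knowledge base, not the model.
index.add_document("returns-policy", "Returns accepted within 30 days.")
for doc_id, text in index.search("returns window"):
    print(f"{text} [source: {doc_id}]")
```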

Challenges and Considerations in Implementing RAG Chatbots

While RAG chatbot architecture offers numerous benefits, it also presents several challenges that organizations must address when implementing these solutions. These challenges include:

- Knowledge Base Management: Maintaining a high-quality and up-to-date knowledge base is crucial for the success of RAG chatbots. This requires ongoing effort to curate and update the information in the knowledge base.

- Retrieval Algorithm Optimization: Choosing the right retrieval algorithm and optimizing it for the specific knowledge base is essential for ensuring that the chatbot can retrieve the most relevant information.

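- Generation Model Training: Training or fine-tuning a robust and effective generation model requires a significant amount of data and computational resources; many deployments instead use a pre-trained LLM, which shifts much of this cost to inference.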

- Handling Ambiguous Queries: RAG chatbots must be able to handle ambiguous or complex queries that may require multiple steps to resolve.

- Ensuring Data Privacy and Security: Organizations must take steps to ensure that the data in the knowledge base is protected from unauthorized access and use.
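Choosing and tuning a retrieval algorithm usually starts with measuring it. One standard metric is recall@k: the fraction of the known-relevant documents that appear in a retriever's top k results, averaged over a labeled set of test queries. The sketch below compares two retrievers on toy labels and runs that are made up for illustration, not measurements; all names are hypothetical.

```python
def recall_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Fraction of the relevant documents found among the top-k retrieved IDs."""
    if not relevant:
        raise ValueError("relevant must be non-empty")
    return len(set(retrieved[:k]) & relevant) / len(relevant)


def mean_recall_at_k(runs: dict[str, list[str]], labels: dict[str, set[str]], k: int) -> float:
    """Average recall@k over every labeled query."""
    scores = [recall_at_k(runs[q], labels[q], k) for q in labels]
    return sum(scores) / len(scores)


# Toy ground truth: which documents actually answer each test query.
labels = {"q1": {"d1"}, "q2": {"d3", "d4"}}

# Toy ranked results from two candidate retrievers.
retriever_a_runs = {"q1": ["d2", "d1", "d5"], "q2": ["d3", "d9", "d8"]}
retriever_b_runs = {"q1": ["d1", "d2", "d5"], "q2": ["d4", "d3", "d7"]}

for k in (1, 2):
    a = mean_recall_at_k(retriever_a_runs, labels, k)
    b = mean_recall_at_k(retriever_b_runs, labels, k)
    print(f"recall@{k}: A={a:.2f}  B={b:.2f}")
```

On these toy runs, retriever B scores 0.75 at k=1 versus 0.25 for A. The same harness can be rerun after changing chunk sizes, embedding models, or hybrid weights.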

Real-World Applications of RAG Chatbots

RAG chatbot architecture is being used in a wide range of industries and applications, including:

- Customer Service: RAG chatbots can provide customers with instant answers to their questions, reducing the need for human agents.

- Technical Support: RAG chatbots can help users troubleshoot technical issues by providing them with access to relevant documentation and knowledge base articles.

- E-commerce: RAG chatbots can help customers find products, answer questions about orders, and provide personalized recommendations.

- Healthcare: RAG chatbots can provide patients with information about their health conditions, medications, and treatment options.

- Education: RAG chatbots can help students learn new concepts, answer questions about assignments, and provide personalized feedback.

For example, consider a customer service chatbot for an online retailer. Using a RAG chatbot architecture, the chatbot can access a comprehensive knowledge base of product information, order details, and frequently asked questions. When a customer asks a question about a specific product, the chatbot can retrieve relevant information from the knowledge base and use it to generate a detailed and accurate response. This not only saves the customer time but also reduces the burden on human customer service agents.
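The retailer scenario can be sketched in a few lines. Several assumptions are worth flagging: the FAQ entries are invented, retrieval uses Jaccard word overlap instead of embeddings, the matched FAQ answer is returned directly rather than passed to an LLM, and the 0.1 score threshold for handing the conversation to a human agent is an arbitrary value that would need tuning on real queries.

```python
import re

# Hypothetical FAQ knowledge base for an online retailer.
FAQ = {
    "shipping": "Standard orders ship within two business days.",
    "returns": "Unused items can be returned within 30 days for a full refund.",
    "warranty": "Electronics include a one-year manufacturer warranty.",
}


def tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Shared words divided by total distinct words (0.0 for two empty sets)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def answer(question: str, threshold: float = 0.1) -> str:
    """Return the best-matching FAQ entry with its source, or hand off to a
    human agent when no entry scores at or above the threshold."""
    q = tokens(question)
    best_id, best_score = max(
        ((doc_id, jaccard(q, tokens(text))) for doc_id, text in FAQ.items()),
        key=lambda pair: pair[1],
    )
    if best_score < threshold:
        return "Let me connect you with a customer service agent."
    return f"{FAQ[best_id]} (source: {best_id})"


print(answer("Can I return unused items within 30 days?"))
print(answer("Do you price match competitors?"))
```

The first question matches the returns entry and is answered with its source; the second is not covered by the FAQ, so it falls through to a human agent instead of producing an ungrounded answer.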

The Future of RAG Chatbot Architecture

The future of RAG chatbot architecture is bright, with ongoing research and development focused on improving the accuracy, efficiency, and scalability of these solutions. Some of the key trends shaping the future of RAG chatbots include:

- Improved Retrieval Algorithms: Researchers are developing new retrieval algorithms that can more effectively identify relevant information from large and complex knowledge bases.

- More Powerful Generation Models: Advances in large language models are leading to more powerful and versatile generation models that can generate more natural and engaging responses.

- Integration with Other AI Technologies: RAG chatbots are increasingly being integrated with other AI technologies, such as computer vision and speech recognition, to create more comprehensive and intelligent solutions.

- Personalization and Customization: RAG chatbots are becoming more personalized and customizable, allowing organizations to tailor them to the specific needs of their users.

- Explainable AI (XAI): There’s a growing focus on making RAG chatbot responses more explainable, allowing users to understand why the chatbot provided a particular answer.

As these trends continue to evolve, RAG chatbot architecture is poised to become an even more powerful and versatile tool for organizations seeking to enhance customer engagement, streamline operations, and deliver personalized experiences. The combination of robust retrieval mechanisms and advanced generation capabilities makes RAG a compelling choice for building conversational AI solutions that are both knowledgeable and engaging.

Conclusion

RAG chatbot architecture represents a significant advancement in the field of conversational AI. By combining the strengths of information retrieval and text generation, RAG chatbots can provide users with more accurate, comprehensive, and personalized responses. Implementing them brings real challenges, chiefly keeping the knowledge base current, tuning retrieval, and protecting data, but the benefits make RAG a compelling choice for organizations seeking to build intelligent conversational AI solutions. As retrieval methods and language models continue to improve, we can expect to see even more innovative and impactful applications of RAG chatbots in the years to come.

[See also: Building Scalable Chatbots with Kubernetes]

[See also: The Role of NLP in Modern Chatbot Development]

[See also: Comparing Different Chatbot Frameworks]