Understanding the Architecture of a CPU: A Deep Dive

The Central Processing Unit (CPU) is the brain of any computer system. Its architecture dictates how it processes instructions, manages data, and interacts with other components. This article examines CPU architecture in detail: its key components, how they function, and how designs have evolved over time. Understanding CPU architecture is fundamental for anyone looking to optimize system performance, troubleshoot hardware issues, or simply gain a deeper appreciation for the technology that powers our digital world.

Core Components of a CPU

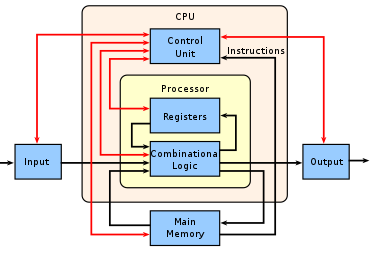

The architecture of a CPU comprises several essential components, each playing a crucial role in the execution of instructions:

- Arithmetic Logic Unit (ALU): The ALU performs arithmetic and logical operations. It’s the workhorse of the CPU, executing instructions like addition, subtraction, multiplication, division, and logical comparisons.

- Control Unit (CU): The CU fetches instructions from memory, decodes them, and coordinates the activities of other components within the CPU. It’s the conductor of the orchestra, ensuring that everything works in harmony.

- Registers: Registers are small, high-speed storage locations within the CPU used to hold data and instructions that are being actively processed. They provide quick access to frequently used data, significantly speeding up processing.

- Cache Memory: Cache memory is a small, fast memory that stores frequently accessed data and instructions. It acts as a buffer between the CPU and main memory, reducing the time it takes to retrieve information. There are typically multiple levels of cache (L1, L2, L3): L1 is the smallest and fastest, and each further level is larger but slower.

- Bus Interface Unit (BIU): The BIU manages the flow of data between the CPU and other components, such as memory and peripherals. It ensures that data is transferred efficiently and reliably.

Instruction Set Architecture (ISA)

The Instruction Set Architecture (ISA) defines the set of instructions that a CPU can execute. It acts as the interface between software and hardware, specifying the format of instructions, the types of operations that can be performed, and the addressing modes that can be used. Common ISAs include x86, ARM, and RISC-V. The choice of ISA significantly impacts the performance, power consumption, and complexity of a CPU. A CPU's internal design (its microarchitecture) is the hardware implementation of its ISA, so the two are closely tied, although different CPUs can implement the same ISA in very different ways.
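
To make "the format of instructions" concrete, here is a minimal sketch that splits one 32-bit RISC-V instruction word into its fields. It assumes the standard R-type layout from the RV32I base instruction set; the helper names `bits` and `decode_r_type` are made up for this illustration and are not part of any library.

```python
# Field layout of a 32-bit RISC-V R-type instruction (bit ranges, inclusive):
#   funct7 [31:25] | rs2 [24:20] | rs1 [19:15] | funct3 [14:12] | rd [11:7] | opcode [6:0]

def bits(word, high, low):
    """Return bits high..low (inclusive) of an instruction word."""
    width = high - low + 1
    return (word >> low) & ((1 << width) - 1)


def decode_r_type(word):
    """Split an R-type instruction word into its named fields."""
    return {
        "opcode": bits(word, 6, 0),
        "rd": bits(word, 11, 7),
        "funct3": bits(word, 14, 12),
        "rs1": bits(word, 19, 15),
        "rs2": bits(word, 24, 20),
        "funct7": bits(word, 31, 25),
    }


# 0x002081B3 is the RV32I encoding of `add x3, x1, x2`.
fields = decode_r_type(0x002081B3)
print(fields)
```

The decoded fields identify the operation (opcode `0x33` with `funct3` and `funct7` both zero means `add`), the destination register `x3`, and the source registers `x1` and `x2`. Other ISAs pack the same kind of information into different layouts.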

Types of ISAs

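- CISC (Complex Instruction Set Computing): CISC architectures, such as x86, provide a large number of instructions, some of which perform multi-step operations (for example, reading memory, computing, and writing the result back) in a single instruction. This can reduce the number of instructions a program needs, but complex instructions may take several clock cycles and make the decoding hardware more complicated.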

- RISC (Reduced Instruction Set Computing): RISC architectures, such as ARM, use a smaller number of simpler instructions. This can lead to faster execution times and lower power consumption but may require more instructions to perform the same task.
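
The trade-off in instruction count can be sketched with a toy model. The example below computes `memory[2] = memory[0] + memory[1]` in two ways: once with a single memory-to-memory instruction, and once with a load/store sequence. The mnemonics (`ADDM`, `LOAD`, `ADD`, `STORE`) are hypothetical and do not correspond to any real ISA; the sketch only counts instructions and says nothing about how long each one takes.

```python
def run_cisc_style(memory):
    """One hypothetical memory-to-memory instruction: ADDM dst, src1, src2."""
    program = [("ADDM", 2, 0, 1)]
    for _, dst, src1, src2 in program:
        memory[dst] = memory[src1] + memory[src2]
    return len(program)


def run_risc_style(memory):
    """Load/store style: only LOAD and STORE touch memory (hypothetical mnemonics)."""
    program = [
        ("LOAD", "r1", 0),          # r1 <- memory[0]
        ("LOAD", "r2", 1),          # r2 <- memory[1]
        ("ADD", "r3", "r1", "r2"),  # r3 <- r1 + r2
        ("STORE", "r3", 2),         # memory[2] <- r3
    ]
    regs = {}
    for instr in program:
        op = instr[0]
        if op == "LOAD":
            regs[instr[1]] = memory[instr[2]]
        elif op == "ADD":
            regs[instr[1]] = regs[instr[2]] + regs[instr[3]]
        elif op == "STORE":
            memory[instr[2]] = regs[instr[1]]
    return len(program)


cisc_memory = [5, 7, 0]
risc_memory = [5, 7, 0]
cisc_count = run_cisc_style(cisc_memory)
risc_count = run_risc_style(risc_memory)
print(cisc_count, cisc_memory)  # 1 [5, 7, 12]
print(risc_count, risc_memory)  # 4 [5, 7, 12]
```

Both versions leave the same result in memory. The load/store version uses more instructions, but each one does a single simple thing, which is the property RISC designs rely on.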

The Fetch-Decode-Execute Cycle

The execution of instructions in a CPU follows a fundamental cycle known as the fetch-decode-execute cycle. This cycle is repeated continuously, allowing the CPU to process a stream of instructions:

- Fetch: The CU fetches the next instruction from memory.

- Decode: The CU decodes the instruction, determining the operation to be performed and the operands involved.

- Execute: The CU signals the appropriate components (e.g., ALU, registers) to perform the operation.

This cycle is the cornerstone of how a CPU operates, and optimizing each step is crucial for improving performance.
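
The three steps can be sketched as a small interpreter loop. This is a toy model under stated assumptions: the instruction names (`LOADI`, `ADD`, `SUB`, `JNZ`, `HALT`), the three registers, and the tuple-based program format are invented for illustration, and a real CPU fetches binary instruction words rather than Python tuples.

```python
def run(program, max_steps=1000):
    """Run a toy program and return the final register values."""
    registers = {"r0": 0, "r1": 0, "r2": 0}
    pc = 0  # program counter: index of the next instruction

    for _ in range(max_steps):
        # Fetch: read the instruction the program counter points at.
        instruction = program[pc]
        pc += 1

        # Decode: separate the operation from its operands.
        opcode, *operands = instruction

        # Execute: perform the operation.
        if opcode == "LOADI":    # load an immediate value into a register
            dst, value = operands
            registers[dst] = value
        elif opcode == "ADD":
            dst, a, b = operands
            registers[dst] = registers[a] + registers[b]
        elif opcode == "SUB":
            dst, a, b = operands
            registers[dst] = registers[a] - registers[b]
        elif opcode == "JNZ":    # jump to target if the register is non-zero
            reg, target = operands
            if registers[reg] != 0:
                pc = target
        elif opcode == "HALT":
            return registers
        else:
            raise ValueError(f"unknown opcode: {opcode}")

    raise RuntimeError("step limit reached without HALT")


# Sum 3 + 2 + 1 by counting r1 down to zero.
program = [
    ("LOADI", "r0", 0),        # 0: running total
    ("LOADI", "r1", 3),        # 1: counter
    ("LOADI", "r2", 1),        # 2: constant 1
    ("ADD", "r0", "r0", "r1"), # 3: total += counter
    ("SUB", "r1", "r1", "r2"), # 4: counter -= 1
    ("JNZ", "r1", 3),          # 5: loop while counter != 0
    ("HALT",),                 # 6
]
result = run(program)
print(result)  # {'r0': 6, 'r1': 0, 'r2': 1}
```

Note that the jump instruction works simply by overwriting the program counter, which changes what the next fetch reads.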

Pipelining and Parallel Processing

Modern CPUs employ various techniques to improve performance, including pipelining and parallel processing.

Pipelining

Pipelining allows multiple instructions to be in progress at once by dividing the fetch-decode-execute cycle into stages. While one instruction is being executed, another instruction can be decoded, and a third instruction can be fetched. This overlapping execution significantly increases the throughput of the CPU, even though each individual instruction still passes through every stage. Pipelining is a core feature of modern CPU architecture.
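
The throughput gain can be estimated with a short calculation. The sketch below assumes an idealized three-stage pipeline in which every stage takes exactly one clock cycle and there are no stalls or hazards; real pipelines have more stages and do stall. The function names are made up for this illustration.

```python
STAGES = ["F", "D", "E"]  # fetch, decode, execute


def cycles_unpipelined(num_instructions, num_stages):
    """Each instruction finishes all stages before the next one starts."""
    return num_instructions * num_stages


def cycles_pipelined(num_instructions, num_stages):
    """The first instruction fills the pipeline; each later one adds one cycle."""
    return num_stages + num_instructions - 1


def pipeline_schedule(num_instructions, stages=STAGES):
    """Return one row per instruction showing its stage in each clock cycle."""
    total_cycles = cycles_pipelined(num_instructions, len(stages))
    rows = []
    for i in range(num_instructions):
        row = ["."] * total_cycles
        for offset, name in enumerate(stages):
            row[i + offset] = name  # instruction i enters the pipeline at cycle i
        rows.append("".join(row))
    return rows


schedule = pipeline_schedule(4)
for row in schedule:
    print(row)
# FDE...
# .FDE..
# ..FDE.
# ...FDE

print(cycles_unpipelined(4, 3))  # 12
print(cycles_pipelined(4, 3))    # 6
```

Reading the schedule column by column shows the overlap: in the third cycle the first instruction executes while the second is decoded and the third is fetched. For a long instruction stream, the ideal speedup approaches the number of stages.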

Parallel Processing

Parallel processing involves executing multiple instructions or tasks simultaneously using multiple processing units. This can be achieved through techniques such as:

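- Multicore Processors: Multicore processors contain multiple independent processing cores on a single chip, each able to run its own instruction stream, allowing for true parallel execution.

- Multithreading: Multithreading allows a single CPU core to make progress on multiple threads concurrently, either by rapidly switching between them or, with simultaneous multithreading (SMT), by issuing instructions from several threads in the same clock cycle.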

- SIMD (Single Instruction, Multiple Data): SIMD instructions allow a single instruction to operate on multiple data elements simultaneously, enabling parallel processing of data-intensive tasks.
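
The SIMD idea in particular is easy to model by counting operations. The sketch below adds two lists element by element, first one element per operation and then in groups of four "lanes" per operation. It is only a model of the instruction count: Python still performs the additions one after another, and the lane width of 4 is an assumption chosen for the example.

```python
def scalar_add(a, b):
    """Add element by element: one operation per pair of elements."""
    result, operations = [], 0
    for x, y in zip(a, b):
        result.append(x + y)
        operations += 1
    return result, operations


def simd_add(a, b, lanes=4):
    """Model a SIMD add: one operation handles up to `lanes` pairs at once."""
    result, operations = [], 0
    for start in range(0, len(a), lanes):
        chunk_a = a[start:start + lanes]
        chunk_b = b[start:start + lanes]
        # On real hardware these lane-wise additions happen in parallel.
        result.extend(x + y for x, y in zip(chunk_a, chunk_b))
        operations += 1
    return result, operations


a = list(range(8))
b = [10] * 8
scalar_result, scalar_ops = scalar_add(a, b)
simd_result, simd_ops = simd_add(a, b)
print(scalar_result, scalar_ops)  # [10, 11, 12, 13, 14, 15, 16, 17] 8
print(simd_result, simd_ops)      # [10, 11, 12, 13, 14, 15, 16, 17] 2
```

Both versions produce the same values, but the SIMD model needs a quarter of the operations, which is why SIMD suits workloads that apply the same operation to large arrays.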

Memory Hierarchy and Caching

The memory hierarchy is a tiered system of memory components, each with different speeds and costs. The CPU interacts with the memory hierarchy to retrieve and store data and instructions. The hierarchy typically consists of:

- Registers: The fastest and most expensive memory, located within the CPU.

- Cache Memory: A small, fast memory that stores frequently accessed data and instructions.

- Main Memory (RAM): The primary memory of the system, used to store data and instructions that are currently being used.

- Secondary Storage (Hard Drive, SSD): The slowest and cheapest memory, used to store data and instructions that are not actively being used.

Caching is a technique used to improve performance by storing frequently accessed data and instructions in cache memory. When the CPU needs to access data, it first checks the cache. If the data is found in the cache (a cache hit), it can be retrieved quickly. If the data is not found in the cache (a cache miss), it must be retrieved from the next level of cache or, ultimately, from main memory, which is much slower. Effective caching strategies are therefore essential for optimizing CPU performance.
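
Hits and misses can be counted with a small simulation. The sketch below models a direct-mapped cache, one common organization in which each memory block can live in exactly one cache line. The sizes (4 lines of 4 addresses each) and the function name are assumptions chosen to keep the example small; real caches are far larger and often set-associative.

```python
def simulate_direct_mapped(addresses, num_lines=4, block_size=4):
    """Count hits and misses for a sequence of memory addresses."""
    lines = [None] * num_lines  # tag stored in each cache line; None means empty
    hits = misses = 0
    for address in addresses:
        block = address // block_size  # which memory block holds this address
        index = block % num_lines      # the one cache line this block may use
        tag = block // num_lines       # identifies which block occupies the line
        if lines[index] == tag:
            hits += 1
        else:
            misses += 1
            lines[index] = tag         # load the block, evicting the old one
    return hits, misses


# Sequential access: each block misses once, then hits for its other addresses.
sequential = simulate_direct_mapped(range(16))
print(sequential)  # (12, 4)

# Addresses 0 and 16 map to the same line and keep evicting each other.
conflicting = simulate_direct_mapped([0, 16, 0, 16])
print(conflicting)  # (0, 4)
```

The two access patterns show why cache behavior depends on the program as much as on the hardware: sequential access benefits from each loaded block, while the alternating pattern misses every time despite touching only two addresses.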

Evolution of CPU Architecture

The architecture of a CPU has evolved dramatically over the years, driven by advancements in technology and the increasing demands of software applications. Key milestones in CPU evolution include:

- The Transistor Revolution: The invention of the transistor replaced bulky and power-hungry vacuum tubes, enabling the creation of smaller, faster, and more energy-efficient CPUs.

- The Integrated Circuit (IC): The development of the IC allowed multiple transistors and other components to be integrated onto a single chip, leading to further miniaturization and increased performance.

- Microprocessors: The creation of the microprocessor, a single-chip CPU, revolutionized computing, making it more accessible and affordable.

- Multicore Processors: The introduction of multicore processors enabled parallel processing, significantly increasing computing power.

- Modern Architectures: Contemporary CPUs feature complex architectures with advanced features such as out-of-order execution, branch prediction, and SIMD instructions, pushing the boundaries of performance.

Future Trends in CPU Architecture

The future of CPU architecture is likely to be shaped by several key trends:

- Chiplet Designs: Chiplet designs involve integrating multiple smaller chips (chiplets) into a single package, allowing for greater flexibility and scalability.

- Heterogeneous Computing: Heterogeneous computing involves combining different types of processing units (e.g., CPUs, GPUs, FPGAs) on a single chip or within a single system, allowing for specialized processing of different types of workloads.

- Quantum Computing: Quantum computing promises to revolutionize computing by leveraging the principles of quantum mechanics to solve problems that are intractable for classical computers.

- Neuromorphic Computing: Neuromorphic computing aims to mimic the structure and function of the human brain, enabling the creation of more efficient and intelligent computing systems.

Conclusion

CPU architecture is a complex and fascinating topic. Understanding its core components, functionalities, and evolution is essential for anyone interested in computer systems. From the ALU and Control Unit to pipelining and parallel processing, each aspect of CPU architecture plays a crucial role in determining the performance and capabilities of a computer. As technology continues to advance, we can expect further innovations in CPU architecture that will shape the future of computing.