Reinforcement Learning in AI: A Comprehensive Overview

Artificial Intelligence (AI) is rapidly transforming industries, and at its core lies a variety of machine learning techniques. One of the most promising and dynamic of these is Reinforcement Learning (RL). Reinforcement Learning in AI involves training agents to make sequences of decisions in an environment so as to maximize a cumulative reward. This approach, inspired by behavioral psychology, differs significantly from supervised and unsupervised learning and is especially well suited to complex problems that involve sequential decision-making.

What is Reinforcement Learning?

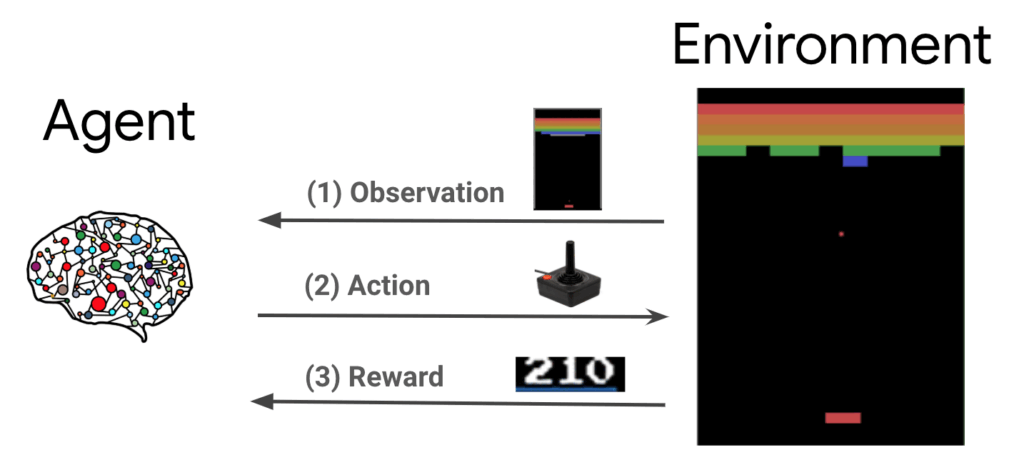

At its essence, Reinforcement Learning in AI is about learning through trial and error. An agent interacts with an environment, performing actions and receiving feedback in the form of rewards or penalties. The agent’s goal is to learn a policy, a mapping from states to actions, that maximizes the total reward it receives over time. This contrasts with supervised learning, where a model learns from examples labeled with the correct output, and unsupervised learning, where a model finds patterns in unlabeled data. An RL agent is never told which action was correct; it only observes how good the outcome was.

The key components of an RL system include:

- Agent: The decision-maker.

- Environment: The world the agent interacts with.

- State: The current situation the agent finds itself in.

- Action: A choice the agent can make.

- Reward: A scalar feedback signal from the environment indicating how desirable the outcome of an action was.

- Policy: The strategy the agent uses to determine which action to take in each state.

The learning process in Reinforcement Learning typically involves the agent exploring different actions and observing the resulting rewards. Over time, the agent refines its policy to favor actions that lead to higher cumulative rewards. This iterative process lets RL agents learn effective strategies without being told the correct action for each situation.
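To make this loop concrete, here is a minimal Python sketch of one episode of agent-environment interaction. The `Corridor` environment, its `reset`/`step` methods, and its reward of 1 for reaching the goal are hypothetical, invented only for illustration; the interface loosely resembles the reset/step style common in RL toolkits but is not any particular library's API.

```python
import random

class Corridor:
    """Hypothetical toy environment: positions 0..length-1, goal at the right end."""

    def __init__(self, length=5):
        self.length = length
        self.state = 0

    def reset(self):
        self.state = 0
        return self.state

    def step(self, action):
        # action 0 = move left, 1 = move right; the walls clamp the position
        move = 1 if action == 1 else -1
        self.state = min(max(self.state + move, 0), self.length - 1)
        done = self.state == self.length - 1
        reward = 1.0 if done else 0.0  # reward only for reaching the goal
        return self.state, reward, done

def run_episode(env, policy, max_steps=100):
    """Agent-environment loop: observe the state, act, receive a reward, repeat."""
    state = env.reset()
    total_reward = 0.0
    steps = 0
    for steps in range(1, max_steps + 1):
        action = policy(state)
        state, reward, done = env.step(action)
        total_reward += reward
        if done:
            break
    return total_reward, steps

# A random policy (pure exploration) versus an "always move right" policy.
rng = random.Random(0)
print(run_episode(Corridor(), lambda s: rng.choice([0, 1])))
print(run_episode(Corridor(), lambda s: 1))  # (1.0, 4)
```

Changing the agent's behavior only means swapping the `policy` function; learning algorithms amount to improving that function from the rewards the loop returns.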

Key Concepts in Reinforcement Learning

Several key concepts underpin the functionality of Reinforcement Learning. Understanding these is crucial for grasping how RL algorithms work and how they can be applied effectively:

Markov Decision Processes (MDPs)

Many Reinforcement Learning problems can be formulated as Markov Decision Processes (MDPs). An MDP is a mathematical framework for modeling sequential decision-making in environments where the outcome of an action depends only on the current state and the chosen action. Key properties of an MDP include:

- Markov Property: The probability distribution of the next state depends only on the current state and action, not on the earlier history.

- State Space: The set of all possible states the agent can be in.

- Action Space: The set of all possible actions the agent can take.

- Transition Probabilities: The probability of transitioning from one state to another given an action.

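- Reward Function: A function that defines the reward received for taking a particular action in a particular state.

- Discount Factor: A number γ between 0 and 1 that makes rewards received later count for less than immediate ones; with γ < 1, the cumulative reward stays finite even over an unbounded horizon.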
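One common way to write down a small, finite MDP in code is a nested table of transition outcomes. The sketch below assumes a hypothetical three-state problem in which an "advance" action succeeds with probability 0.8; the states, actions, probabilities, rewards, and the discount value 0.9 are all illustrative assumptions.

```python
import random

# Hypothetical 3-state MDP. States: 0, 1, 2 (2 is absorbing/terminal).
# Actions: 0 = "stay", 1 = "advance". P[s][a] lists (probability, next_state, reward).
P = {
    0: {0: [(1.0, 0, 0.0)],
        1: [(0.8, 1, 0.0), (0.2, 0, 0.0)]},   # advancing fails 20% of the time
    1: {0: [(1.0, 1, 0.0)],
        1: [(0.8, 2, 1.0), (0.2, 1, 0.0)]},   # reaching state 2 pays reward 1
    2: {0: [(1.0, 2, 0.0)],
        1: [(1.0, 2, 0.0)]},
}
# Together, STATES, ACTIONS, P (transitions with rewards), and GAMMA specify the MDP.
STATES = list(P)
ACTIONS = [0, 1]
GAMMA = 0.9  # discount factor (assumed value)

def is_valid(P):
    """Each (state, action) pair must define a probability distribution over outcomes."""
    return all(abs(sum(p for p, _, _ in outcomes) - 1.0) < 1e-9
               for actions in P.values() for outcomes in actions.values())

def sample_step(P, state, action, rng=random):
    """Markov property in action: the outcome depends only on (state, action)."""
    outcomes = P[state][action]
    weights = [p for p, _, _ in outcomes]
    _, next_state, reward = rng.choices(outcomes, weights=weights, k=1)[0]
    return next_state, reward

rng = random.Random(0)
print(is_valid(P))                # True
print(sample_step(P, 1, 1, rng))  # (2, 1.0) or (1, 0.0), depending on the draw
```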

Exploration vs. Exploitation

A fundamental challenge in Reinforcement Learning in AI is the trade-off between exploration and exploitation. Exploration involves trying new actions to discover potentially better strategies, while exploitation involves choosing the action that currently looks best, based on what the agent has learned so far, to collect reward. Balancing these two is essential for effective learning.

Too much exploration can lead to inefficient learning, as the agent spends too much time trying suboptimal actions. Too much exploitation can lead to the agent getting stuck in a local optimum, missing out on potentially better strategies.
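A widely used way to balance the two is ε-greedy action selection: with probability ε the agent explores by picking a random action, and otherwise it exploits the action with the highest estimated value. The sketch below applies it to a hypothetical three-armed bandit (a single-state problem with noisy rewards); the arm means, the unit Gaussian noise, ε = 0.1, and the function names are assumptions for illustration.

```python
import random

def epsilon_greedy(q_values, epsilon, rng):
    """With probability epsilon explore (uniform random action); otherwise exploit (argmax)."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])

def run_bandit(true_means, epsilon, steps=2000, seed=0):
    """Hypothetical Gaussian bandit: arm a pays true_means[a] plus unit-variance noise."""
    rng = random.Random(seed)
    estimates = [0.0] * len(true_means)
    counts = [0] * len(true_means)
    total = 0.0
    for _ in range(steps):
        a = epsilon_greedy(estimates, epsilon, rng)
        reward = rng.gauss(true_means[a], 1.0)
        counts[a] += 1
        estimates[a] += (reward - estimates[a]) / counts[a]  # incremental sample mean
        total += reward
    return estimates, counts, total

estimates, counts, total = run_bandit([0.2, 0.5, 1.0], epsilon=0.1)
print(counts)  # how often each arm was pulled
```

Setting `epsilon=0.0` gives pure exploitation, which can lock onto whichever arm happened to pay well early; setting it to `1.0` gives pure exploration, which never concentrates on the best arm.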

Value Functions and Q-Functions

Value functions and Q-functions are used to estimate the long-term value of being in a particular state or taking a particular action in a particular state. The value function V(s) represents the expected cumulative reward starting from state s, following a particular policy. The Q-function Q(s, a) represents the expected cumulative reward starting from state s, taking action a, and then following a particular policy.
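Written out formally, these definitions usually include a discount factor γ in [0, 1) that makes later rewards count for less (the sentence above speaks only of cumulative reward; the discount is the standard way to keep that sum finite). Here r_{t+1} is the reward received after the action taken at step t. The last two lines relate the functions to each other: V averages Q over the policy's action probabilities π(a | s), and Q is one step of reward plus the discounted value of the next state.

```latex
\begin{aligned}
V^{\pi}(s)   &= \mathbb{E}_{\pi}\Big[\textstyle\sum_{t=0}^{\infty} \gamma^{t} r_{t+1} \,\Big|\, s_0 = s\Big] \\
Q^{\pi}(s,a) &= \mathbb{E}_{\pi}\Big[\textstyle\sum_{t=0}^{\infty} \gamma^{t} r_{t+1} \,\Big|\, s_0 = s,\ a_0 = a\Big] \\
V^{\pi}(s)   &= \textstyle\sum_{a} \pi(a \mid s)\, Q^{\pi}(s,a) \\
Q^{\pi}(s,a) &= \mathbb{E}\big[r_{1} + \gamma V^{\pi}(s_{1}) \,\big|\, s_0 = s,\ a_0 = a\big]
\end{aligned}
```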

These functions are crucial for evaluating the quality of different actions and policies, and they are used in many Reinforcement Learning algorithms to guide the learning process.
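As a sketch of how V and Q can be computed when the environment is fully known, the code below runs iterative policy evaluation (repeatedly applying V(s) ← r + γ·V(s') until the values stop changing) on a hypothetical three-state chain with deterministic transitions, then derives Q from V. The chain, its reward of 1 for reaching the end, γ = 0.9, and the fixed "always move right" policy are illustrative assumptions; with stochastic transitions or policies, each backup becomes an expectation.

```python
# Hypothetical deterministic chain: states 0, 1, 2; state 2 is terminal.
# Actions: 0 = left, 1 = right. Entering state 2 yields reward 1.
N_STATES, TERMINAL, GAMMA = 3, 2, 0.9

def step(s, a):
    next_s = max(s - 1, 0) if a == 0 else min(s + 1, TERMINAL)
    reward = 1.0 if (next_s == TERMINAL and s != TERMINAL) else 0.0
    return next_s, reward

def evaluate_policy(policy, tol=1e-10):
    """Iterative policy evaluation for a deterministic policy (in-place sweeps)."""
    V = [0.0] * N_STATES
    while True:
        delta = 0.0
        for s in range(N_STATES):
            if s == TERMINAL:
                continue  # the terminal state keeps value 0
            next_s, r = step(s, policy(s))
            new_v = r + GAMMA * V[next_s]
            delta = max(delta, abs(new_v - V[s]))
            V[s] = new_v
        if delta < tol:
            return V

def q_from_v(V):
    """Q(s, a) = r(s, a) + gamma * V(s') for deterministic transitions."""
    Q = {}
    for s in range(N_STATES):
        if s == TERMINAL:
            continue
        for a in (0, 1):
            next_s, r = step(s, a)
            Q[(s, a)] = r + GAMMA * V[next_s]
    return Q

V = evaluate_policy(lambda s: 1)  # "always move right"
print(V)                          # [0.9, 1.0, 0.0]
Q = q_from_v(V)
```

Q(0, right) equals V(0) because "right" is the action the evaluated policy takes in state 0, while Q(0, left) ≈ 0.81 measures the value of deviating for one step and then following the policy.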

Types of Reinforcement Learning Algorithms

There are several types of Reinforcement Learning algorithms, each with its own strengths and weaknesses. Here are some of the most common:

Q-Learning

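Q-learning is a model-free, off-policy Reinforcement Learning algorithm. It learns the optimal Q-function, the expected cumulative reward for taking a particular action in a particular state and acting optimally afterwards. Because its update looks ahead to the best next action rather than the action the agent actually takes, it can learn this optimal Q-function while following a different, exploratory policy, provided every state-action pair keeps being tried. Q-learning is widely used due to its simplicity and effectiveness.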
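A minimal tabular Q-learning sketch follows. The six-state corridor environment, the reward of 1 at the goal, and the hyperparameters (learning rate α = 0.5, γ = 0.9, ε = 0.1, 500 episodes) are assumptions chosen for illustration. The key line is the update Q(s, a) ← Q(s, a) + α[r + γ·max_a' Q(s', a') - Q(s, a)], whose max is what makes the method off-policy.

```python
import random

N_STATES, GOAL = 6, 5  # hypothetical corridor: states 0..5, episode ends at state 5
LEFT, RIGHT = 0, 1

def step(s, a):
    s2 = max(s - 1, 0) if a == LEFT else min(s + 1, GOAL)
    done = s2 == GOAL
    return s2, (1.0 if done else 0.0), done

def greedy(q_row, rng):
    """Argmax over actions, breaking ties at random."""
    best = max(q_row)
    return rng.choice([a for a, q in enumerate(q_row) if q == best])

def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    Q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        s, done = 0, False
        while not done:
            # Behaviour policy: epsilon-greedy exploration.
            a = rng.choice((LEFT, RIGHT)) if rng.random() < epsilon else greedy(Q[s], rng)
            s2, r, done = step(s, a)
            # Off-policy target: bootstrap from the best action in s2, not the one taken next.
            target = r if done else r + gamma * max(Q[s2])
            Q[s][a] += alpha * (target - Q[s][a])
            s = s2
    return Q

Q = q_learning()
print([greedy(Q[s], random.Random(0)) for s in range(GOAL)])  # [1, 1, 1, 1, 1]: always move right
```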

SARSA (State-Action-Reward-State-Action)

SARSA is another model-free Reinforcement Learning algorithm, but it is on-policy. This means that it learns the Q-function of the policy it is actually following, exploration included: its update uses the next action the agent really takes, which gives the method its name (state, action, reward, next state, next action). SARSA is often used in situations where it is important to learn a safe policy, because its value estimates account for the cost of the exploratory mistakes the agent will actually make.
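The difference from Q-learning comes down to one term in the update target, which the sketch below isolates on a single hypothetical transition. The state and action names, the values (a "safe" next action worth 1.0 and a "risky" one worth -10.0), α = 0.5, and γ = 0.9 are all assumptions; the scenario is that the agent's exploratory policy happens to pick the risky action next.

```python
def sarsa_update(Q, s, a, r, s2, a2, alpha, gamma):
    """On-policy: bootstrap from the action a2 the agent will actually take in s2."""
    target = r + gamma * Q[(s2, a2)]
    Q[(s, a)] += alpha * (target - Q[(s, a)])

def q_learning_update(Q, s, a, r, s2, actions, alpha, gamma):
    """Off-policy: bootstrap from the best action in s2, whatever the agent does next."""
    target = r + gamma * max(Q[(s2, b)] for b in actions)
    Q[(s, a)] += alpha * (target - Q[(s, a)])

Q_sarsa = {("s", "go"): 0.0, ("s2", "safe"): 1.0, ("s2", "risky"): -10.0}
Q_qlearn = dict(Q_sarsa)

# Same transition (s, go) -> s2 with reward 0; the exploratory next action is "risky".
sarsa_update(Q_sarsa, "s", "go", 0.0, "s2", "risky", alpha=0.5, gamma=0.9)
q_learning_update(Q_qlearn, "s", "go", 0.0, "s2", ("safe", "risky"), alpha=0.5, gamma=0.9)
print(Q_sarsa[("s", "go")], Q_qlearn[("s", "go")])  # -4.5 0.45
```

SARSA pulls the estimate down toward the cost of the exploratory action the agent really took, while Q-learning ignores it and backs up the best available value. Over many such transitions, this is why SARSA tends to favor routes that stay clear of costly mistakes while exploration is still switched on.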

Deep Q-Networks (DQN)

Deep Q-Networks (DQN) combine Q-learning with deep neural networks, which approximate the Q-function instead of storing one value per state-action pair in a table. This allows DQN to handle high-dimensional state spaces, such as the raw screen pixels of video games. DQN reached human-level performance on many Atari 2600 games, exceeding human scores on a number of them, demonstrating the power of Reinforcement Learning in AI.
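A full DQN needs a neural-network library, but two ingredients of the original DQN design can be sketched in plain Python: an experience replay buffer, which stores past transitions and trains on random mini-batches drawn from them, and regression targets computed from a separate, periodically updated target network. In the sketch, `target_q` is a hypothetical stand-in for that frozen network (any function mapping a state to a list of action values), and the class and function names are illustrative.

```python
import random
from collections import deque

class ReplayBuffer:
    """Fixed-size store of (s, a, r, s2, done) transitions; random sampling breaks temporal correlation."""

    def __init__(self, capacity, seed=0):
        self.buffer = deque(maxlen=capacity)  # oldest transitions are evicted first
        self.rng = random.Random(seed)

    def push(self, s, a, r, s2, done):
        self.buffer.append((s, a, r, s2, done))

    def sample(self, batch_size):
        return self.rng.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)

def td_targets(batch, target_q, gamma=0.99):
    """y = r + gamma * max_a' target_q(s2)[a'], with no bootstrapping past terminal states."""
    return [r if done else r + gamma * max(target_q(s2)) for (_, _, r, s2, done) in batch]

buf = ReplayBuffer(capacity=3)
for i in range(4):
    buf.push(i, 0, float(i), i + 1, False)  # the first transition is evicted
batch = buf.sample(2)
print(td_targets(batch, target_q=lambda s: [0.0, 2.0], gamma=0.5))
```

In a full agent, the online network would be trained to move Q(s, a) toward these targets, and its weights would be copied into the target network every fixed number of steps.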

Policy Gradient Methods

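Policy gradient methods directly optimize a parameterized policy by gradient ascent on the expected cumulative reward, rather than deriving the policy from a learned value function. These methods are often used in situations where the action space is continuous or where the value function is difficult to estimate accurately. Popular policy gradient methods include REINFORCE, which needs no value function at all, and Actor-Critic methods, which additionally learn a value function (the critic) to reduce the variance of the policy's (the actor's) gradient estimates.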
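REINFORCE can be sketched in a few lines for the simplest possible case: single-step episodes on a hypothetical two-armed bandit, so the return G is just the immediate reward. The policy is a softmax over per-action preferences θ, and each update moves θ by α·G·∇log π(a), using the fact that the gradient of log-softmax with respect to θ_k is 1[k = a] - π(k). The deterministic rewards, learning rate, and episode count are illustrative assumptions, and the sketch omits the baseline that practical implementations commonly subtract from G to reduce variance.

```python
import math
import random

def softmax(prefs):
    m = max(prefs)  # subtract the max for numerical stability
    exps = [math.exp(p - m) for p in prefs]
    z = sum(exps)
    return [e / z for e in exps]

def reinforce_bandit(rewards, episodes=2000, lr=0.1, seed=0):
    """REINFORCE with one-step episodes: theta += lr * G * grad log pi(a)."""
    rng = random.Random(seed)
    theta = [0.0] * len(rewards)  # policy parameters (action preferences)
    for _ in range(episodes):
        probs = softmax(theta)
        a = rng.choices(range(len(theta)), weights=probs, k=1)[0]  # sample from the policy
        G = rewards[a]  # return of a one-step episode
        for k in range(len(theta)):
            grad_log_pi = (1.0 if k == a else 0.0) - probs[k]
            theta[k] += lr * G * grad_log_pi
    return softmax(theta)

print(reinforce_bandit([0.0, 1.0]))  # probability mass shifts toward arm 1
```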

Applications of Reinforcement Learning

Reinforcement Learning in AI has a wide range of applications across various industries. Its ability to solve complex sequential decision-making problems makes it a valuable tool in many domains.

Robotics

RL is used to train robots to perform complex tasks, such as grasping objects, navigating environments, and assembling products. By learning through trial and error, robots can adapt to changing conditions and optimize their performance.

Game Playing

RL has achieved remarkable success in game playing, with agents beating human experts at Go, chess, and a number of video games. Agents for board games such as Go and chess have learned largely through self-play, discovering novel and effective tactics. The success of Reinforcement Learning in gaming highlights its potential for solving complex problems.

Finance

In finance, RL is used for tasks such as portfolio management, algorithmic trading, and risk management. RL agents can learn trading policies that respond to market conditions, with a reward designed to balance returns against risk. The application of Reinforcement Learning in AI offers a data-driven approach to financial decision-making.

Healthcare

RL is being explored for applications in healthcare, such as personalized treatment planning and drug discovery. RL agents could learn treatment strategies from patient data, with the aim of improving outcomes and reducing costs. This application of Reinforcement Learning has the potential to substantially change healthcare delivery.

Autonomous Vehicles

RL is being applied to autonomous vehicles, which must navigate complex environments and make decisions in real time. RL agents can learn driving behaviors that adapt to changing traffic conditions and unexpected events. Reinforcement Learning in AI is a promising approach for the decision-making components of autonomous driving.

Challenges and Future Directions

Despite its successes, Reinforcement Learning in AI still faces several challenges. One major challenge is sample efficiency, as RL algorithms often require a large amount of data to learn effectively. Another challenge is the exploration-exploitation trade-off, which can be difficult to balance in complex environments.

Future research directions in RL include:

- Improving sample efficiency: Developing algorithms that can learn effectively from limited data.

- Addressing the exploration-exploitation trade-off: Developing more sophisticated exploration strategies.

- Scaling RL to more complex problems: Developing algorithms that can handle high-dimensional state and action spaces.

- Combining RL with other machine learning techniques: Integrating RL with supervised and unsupervised learning to leverage the strengths of each approach.

As Reinforcement Learning continues to evolve, it is poised to play an increasingly important role in AI and its applications across various industries. The ongoing research and development efforts are paving the way for more robust, efficient, and versatile RL algorithms that can tackle even the most challenging problems.

Conclusion

Reinforcement Learning in AI is a powerful and versatile machine learning technique that enables agents to learn through trial and error. Its ability to solve complex sequential decision-making problems makes it a valuable tool in various domains, from robotics and game playing to finance and healthcare. While challenges remain, ongoing research and development efforts are driving significant progress, paving the way for even more impactful applications of Reinforcement Learning in the future. Understanding the principles and techniques of Reinforcement Learning is crucial for anyone interested in the future of AI.