Navigating the Labyrinth: Understanding the EU AI Laws and Their Global Impact

The European Union is reshaping the regulatory landscape for artificial intelligence with the AI Act, a comprehensive set of rules governing how AI systems are developed and deployed. Often described as the first comprehensive legal framework for AI, the regulation is a significant step towards a global standard for AI governance. Understanding the nuances of these EU AI laws is crucial for businesses, researchers, and policymakers alike, because their impact will extend well beyond the EU's borders.

This article examines the EU AI laws: their key provisions, their potential implications, and the broader context of AI regulation worldwide. We'll look at the risk-based approach adopted by the EU, the specific requirements for high-risk AI systems, and the challenges and opportunities that lie ahead. We will also consider how these laws are likely to influence other jurisdictions and shape AI innovation globally.

The Genesis of EU AI Laws: A Response to Rapid Technological Advancement

The rapid advancement of artificial intelligence has brought about immense opportunities across various sectors, from healthcare and finance to transportation and education. However, it has also raised concerns about potential risks, including bias, discrimination, privacy violations, and safety hazards. Recognizing the need for a regulatory framework that fosters innovation while mitigating these risks, the European Commission proposed the AI Act in April 2021.

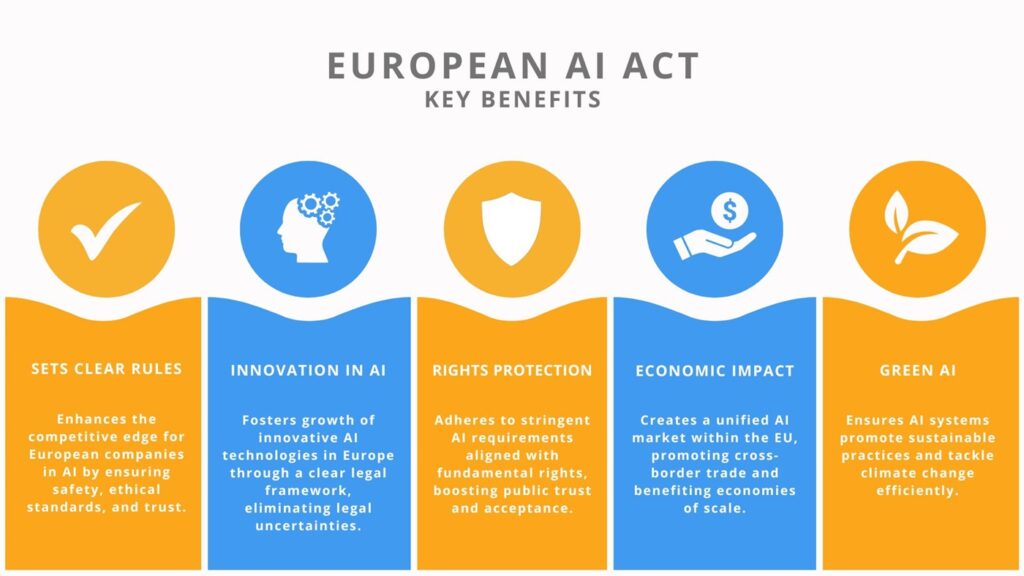

The EU AI laws are not intended to stifle innovation but rather to create a level playing field that promotes trust and responsible AI development. By establishing clear rules and guidelines, the EU aims to ensure that AI systems are safe, reliable, and respect fundamental rights and values.

A Risk-Based Approach: Categorizing AI Systems

At the heart of the EU AI laws is a risk-based approach, which categorizes AI systems by their potential to cause harm. The higher the risk, the stricter the regulatory requirements. This keeps regulation proportionate and targeted, concentrating obligations where the potential for harm is greatest.

The AI Act identifies four categories of AI systems:

- Unacceptable Risk: AI systems considered to pose an unacceptable risk to fundamental rights and safety are prohibited. This includes AI systems that use manipulative or deceptive techniques to distort people's behavior, exploit the vulnerabilities of specific groups, or are used for social scoring.

- High Risk: AI systems deemed to pose a high risk to people's health, safety, or fundamental rights are subject to strict requirements. This includes AI systems used in critical infrastructure, education, employment, law enforcement, and access to essential services, as well as AI that serves as a safety component of products already regulated under EU law, such as medical devices.

- Limited Risk: AI systems that pose a limited risk are subject to transparency obligations. For example, chatbots must inform users that they are interacting with an AI system, and AI-generated content such as deepfakes must be labeled as such.

- Minimal Risk: AI systems that pose minimal risk are largely unregulated. This includes AI systems used for tasks such as video games or spam filtering.
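
To make the tiered structure concrete, here is a deliberately simplified Python sketch that maps a few example use cases from the list above to their tiers. The names `RiskTier`, `EXAMPLE_USE_CASES`, and `classify` are hypothetical and come from no official tool. Real classification under the AI Act depends on the legal text and its annexes, not on a keyword lookup, so the sketch returns `None` for unlisted cases rather than guessing a tier.

```python
from enum import Enum
from typing import Dict, Optional


class RiskTier(Enum):
    """The four risk tiers, from most to least regulated (illustrative model)."""
    UNACCEPTABLE = "prohibited"
    HIGH = "strict requirements"
    LIMITED = "transparency obligations"
    MINIMAL = "largely unregulated"


# Hypothetical example mapping drawn from the categories described above.
# It illustrates the tiered idea only; it is not a legal classification.
EXAMPLE_USE_CASES: Dict[str, RiskTier] = {
    "social scoring": RiskTier.UNACCEPTABLE,
    "critical infrastructure": RiskTier.HIGH,
    "education": RiskTier.HIGH,
    "employment": RiskTier.HIGH,
    "law enforcement": RiskTier.HIGH,
    "chatbot": RiskTier.LIMITED,
    "video game": RiskTier.MINIMAL,
    "spam filter": RiskTier.MINIMAL,
}


def classify(use_case: str) -> Optional[RiskTier]:
    """Look up the tier of a known example use case.

    Returns None for anything not in the example table, because an
    unlisted use case needs a case-by-case assessment, not a default tier.
    """
    return EXAMPLE_USE_CASES.get(use_case.strip().lower())
```

For example, `classify("Chatbot")` returns `RiskTier.LIMITED`, while `classify("weather app")` returns `None`, signalling that the use case needs its own assessment. Refusing to default to a tier mirrors the point that obligations follow from the system's actual use, not from a label.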

Requirements for High-Risk AI Systems: Ensuring Safety and Trustworthiness

High-risk AI systems are subject to a comprehensive set of requirements, backed by a risk management system maintained throughout the system's lifecycle, designed to ensure their safety, reliability, and trustworthiness. These requirements include:

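- Data Governance: The data used to train, validate, and test high-risk AI systems must be relevant, sufficiently representative, and as free of errors and as complete as possible, and it must be examined for possible biases.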

- Technical Documentation and Record-Keeping: Providers must draw up detailed technical documentation explaining how the AI system works and how it meets the requirements of the AI Act, and the system must automatically log events so that its operation can be traced.

- Transparency: High-risk AI systems must be sufficiently transparent, and come with clear instructions for use, so that those deploying them can interpret their outputs and use them appropriately.

- Human Oversight: High-risk AI systems must be designed so that people can effectively oversee them, intervene when something goes wrong, and ensure that they are used responsibly.

- Accuracy and Robustness: High-risk AI systems must be accurate and robust, able to perform reliably in a variety of conditions.

- Cybersecurity: High-risk AI systems must be protected against cybersecurity threats.
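
A team preparing for these obligations might track them system by system. The Python sketch below assumes a hypothetical internal tracker (`ComplianceRecord`, `HIGH_RISK_REQUIREMENTS`, `mark_done`, `outstanding`). The requirement labels paraphrase the list above rather than quoting the Act's article titles, and checking off every item in such a tracker is no substitute for an actual conformity assessment.

```python
from dataclasses import dataclass, field
from typing import List, Set

# Hypothetical labels paraphrasing the high-risk requirements listed above
# (not official titles from the AI Act).
HIGH_RISK_REQUIREMENTS = (
    "data governance",
    "technical documentation",
    "transparency",
    "human oversight",
    "accuracy and robustness",
    "cybersecurity",
)


@dataclass
class ComplianceRecord:
    """Hypothetical tracker for one high-risk AI system's preparation work."""
    system_name: str
    completed: Set[str] = field(default_factory=set)

    def mark_done(self, requirement: str) -> None:
        """Record a requirement as addressed; reject labels not in the list."""
        if requirement not in HIGH_RISK_REQUIREMENTS:
            raise ValueError(f"unknown requirement: {requirement!r}")
        self.completed.add(requirement)

    def outstanding(self) -> List[str]:
        """Return unaddressed requirements in the order they are listed."""
        return [r for r in HIGH_RISK_REQUIREMENTS if r not in self.completed]
```

For instance, after `record = ComplianceRecord("cv-screening")` (a hypothetical employment-related system) and `record.mark_done("human oversight")`, `record.outstanding()` lists the remaining five labels. Rejecting unknown labels keeps a typo from silently counting as completed work.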

These requirements are designed to minimize the risks associated with high-risk AI systems and to build trust in these technologies. Compliance with these requirements will be essential for companies that develop and deploy high-risk AI systems in the EU.

The Impact of EU AI Laws Beyond Europe

The EU AI laws are expected to have a significant impact beyond the borders of the EU. Widely described as the first comprehensive regulatory framework for AI, the AI Act is likely to serve as a model for other countries and regions developing their own AI regulations. Many observers expect the “Brussels Effect,” in which EU regulations become de facto global standards, to play out in AI governance as well. Because the Act applies to providers that place AI systems on the EU market regardless of where they are based, companies worldwide that want access to that market will need to comply. This gives them an incentive to adopt the Act's principles and requirements more broadly, which could lead to a more harmonized global landscape for AI regulation.

Furthermore, the EU AI laws are likely to influence international discussions on AI governance. The EU is actively engaged in international forums, such as the G7 and the OECD, to promote a common understanding of AI risks and to develop international standards for AI. The AI Act will provide the EU with a strong position in these discussions, allowing it to shape the global agenda for AI regulation.

Challenges and Opportunities: Navigating the New Regulatory Landscape

The implementation of the EU AI laws will present both challenges and opportunities for businesses, researchers, and policymakers.

Challenges:

- Compliance Costs: Complying with the AI Act will require significant investments in data governance, technical documentation, transparency, and human oversight. This could be particularly challenging for small and medium-sized enterprises (SMEs).

- Innovation Barriers: Some fear that the strict requirements of the AI Act could stifle innovation and make it more difficult for European companies to compete with companies from other regions.

- Enforcement: Enforcing the AI Act will be a complex and resource-intensive task. It will require the development of new expertise and the establishment of effective monitoring and enforcement mechanisms.

Opportunities:

- Increased Trust: The AI Act could increase trust in AI systems, leading to greater adoption and acceptance of these technologies.

- Competitive Advantage: Companies that comply with the AI Act could gain a competitive advantage by demonstrating their commitment to responsible AI development.

- Innovation Driver: The AI Act could drive innovation by encouraging the development of safer, more reliable, and more trustworthy AI systems.

The Ongoing Evolution of EU AI Laws

The journey towards comprehensive EU AI laws is an ongoing process. After negotiations between the European Parliament and the Council of the European Union, the AI Act was adopted as Regulation (EU) 2024/1689 and entered into force on 1 August 2024. Its obligations apply in stages: the prohibitions from February 2025, the rules for general-purpose AI models from August 2025, and most remaining provisions from August 2026. Even so, the regulatory landscape for AI will continue to evolve as new technologies emerge and new risks are identified.

Staying informed about the latest developments in EU AI laws is essential for businesses, researchers, and policymakers. By understanding the key provisions of the AI Act and the broader context of AI regulation, stakeholders can prepare for the future and ensure that AI is used responsibly and ethically.

Conclusion: Shaping the Future of AI Governance

The EU AI laws represent a bold and ambitious attempt to regulate artificial intelligence and to ensure that it is used for the benefit of society. While implementing the AI Act will present challenges, it also offers significant opportunities to build trust in AI, drive innovation, and shape AI governance worldwide. The EU's meticulous approach, built on a risk-based framework that demands transparency and human oversight, positions it as a leader in responsible AI development. As the world watches, the success of these laws could shape the global trajectory of AI innovation and regulation for decades to come. Companies that adapt to and embrace these new standards will be best positioned to thrive in the evolving landscape of artificial intelligence.
