How Do You Break the C.AI Filter: Understanding the Risks and Realities

The question “how do you break the C.AI filter” comes up often, particularly in online communities interested in pushing the boundaries of AI interaction. C.AI, short for Character.AI, uses filters designed to block harmful, unethical, or inappropriate content. These filters exist to protect users, uphold ethical standards, and comply with legal requirements. Even so, the desire to bypass these safeguards persists, raising important questions about the ethics, legality, and potential consequences of doing so.

Understanding the C.AI Filter

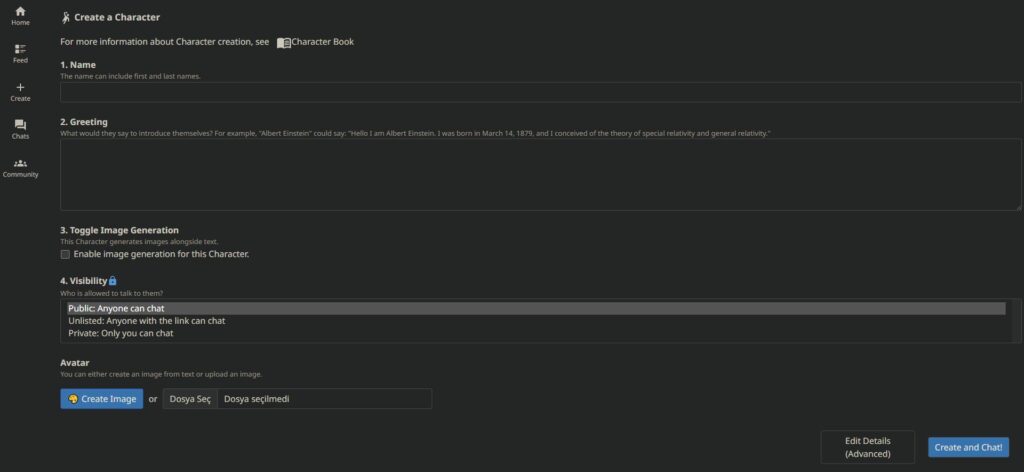

Character.AI uses large language models to power interactive, conversational characters. These characters simulate human-like conversation, giving users an engaging and immersive experience. To prevent misuse and harm, the platform adds filtering mechanisms on top of the model: they analyze both the user’s input prompts and the generated responses, and flag content that violates the platform’s guidelines.
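To make the two checkpoints concrete, here is a minimal Python sketch of a filter that screens the prompt before generation and the response after it. It is not Character.AI’s actual implementation, whose internals are not described here. The keyword set `BLOCKED_TERMS`, its placeholder entries, and the names `check_text` and `filtered_reply` are all hypothetical, and a keyword list stands in for the trained classifiers a production system would more likely use.

```python
import re
from dataclasses import dataclass
from typing import Callable

# Hypothetical placeholder terms; a real filter would use trained
# classifiers rather than a fixed keyword list.
BLOCKED_TERMS = {"blockedterm1", "blockedterm2"}


@dataclass
class FilterResult:
    allowed: bool
    reason: str = ""


def check_text(text: str) -> FilterResult:
    """Flag text that contains any placeholder blocked term (case-insensitive)."""
    tokens = set(re.findall(r"[a-z0-9]+", text.lower()))
    hits = tokens & BLOCKED_TERMS
    if hits:
        return FilterResult(False, f"matched: {sorted(hits)}")
    return FilterResult(True)


def filtered_reply(prompt: str, generate: Callable[[str], str]) -> str:
    """Check the prompt, generate a reply, then check the reply as well."""
    if not check_text(prompt).allowed:
        return "[prompt blocked]"
    reply = generate(prompt)
    if not check_text(reply).allowed:
        return "[response blocked]"
    return reply
```

Passing the model in as a callable (`generate`) keeps the sketch independent of any particular model API: `filtered_reply("hello", lambda p: "hi!")` returns `"hi!"`, while a reply containing a placeholder term is replaced with `"[response blocked]"`.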

The primary goals of these filters include:

- Preventing the generation of sexually explicit or suggestive content.

- Blocking hate speech, discrimination, and offensive language.

- Protecting against the creation of content that promotes violence, illegal activities, or self-harm.

- Ensuring compliance with legal and ethical standards.

The effectiveness of these filters is crucial for maintaining a safe and responsible AI environment. However, users sometimes seek to circumvent these filters, leading to a cat-and-mouse game between developers and those trying to bypass the system. The question of “how do you break the C.AI filter” isn’t just a technical one; it’s deeply intertwined with ethical considerations.

Methods Used to Bypass the Filter

Several techniques have been employed in attempts to break or bypass the C.AI filter. These methods range from simple wordplay to more complex manipulation of prompts and context. Understanding these techniques is essential for both developers seeking to improve filter robustness and users aiming to engage with AI responsibly.

Prompt Engineering

Prompt engineering involves carefully crafting input prompts to elicit desired responses from the AI. This can include using euphemisms, metaphors, or indirect language to suggest topics that would otherwise be blocked by the filter. For example, instead of asking directly about a violent act, a user might describe a scenario that implies violence without explicitly stating it.

Context Manipulation

Context manipulation involves setting up a specific scenario or backstory to influence the AI’s responses. By creating a detailed context, users can sometimes trick the AI into generating content that would normally be filtered out. This technique relies on the AI’s ability to understand and respond to context, which can be exploited to bypass filter restrictions.

Character Persona Exploitation

Some users attempt to exploit the AI’s character persona to generate specific types of content. By prompting the AI to adopt a certain personality or role, users may be able to elicit responses that push the boundaries of the filter. For example, if the AI is programmed to act as a rebellious or mischievous character, it might be more likely to generate content that skirts the edge of acceptability.

Iterative Refinement

Iterative refinement involves repeatedly modifying prompts and analyzing the AI’s responses to gradually refine the interaction. This process allows users to identify loopholes or weaknesses in the filter and exploit them to achieve their desired outcome. It’s a trial-and-error approach that requires patience and persistence.

Risks and Consequences of Bypassing the Filter

While the allure of bypassing the C.AI filter may be strong for some, it’s crucial to recognize the potential risks and consequences associated with such actions. These risks extend beyond mere rule-breaking and can have significant ethical, legal, and personal implications.

Ethical Concerns

Bypassing the filter to generate harmful or inappropriate content raises serious ethical concerns. It undermines the efforts of developers to create safe and responsible AI environments and can contribute to the spread of harmful content online. Engaging in such activities can be seen as morally reprehensible and can damage one’s reputation.

Legal Ramifications

Bypassing the C.AI filter can also carry legal risk, mainly because of what the bypass is used to produce. Generating content that violates laws on hate speech, defamation, or incitement to violence could lead to legal action. Users should know where the legal boundaries lie and avoid activities that could result in fines or other penalties. [See also: Legal Implications of AI Content Generation]

Personal Risks

Attempting to break the C.AI filter can also pose personal risks. For some users, repeated exposure to inappropriate or harmful content can take a psychological toll, contributing to anxiety, depression, or other mental health issues. In addition, online activity can be logged and monitored, which may lead to privacy violations or reputational damage. It’s crucial to prioritize personal well-being and avoid activities that could have harmful consequences.

The Developer’s Perspective: Strengthening the Filter

From the perspective of developers, the ongoing challenge is to strengthen the C.AI filter to prevent bypass attempts and ensure responsible AI usage. This involves a multi-faceted approach that includes improving algorithms, enhancing content moderation, and educating users about responsible AI practices.

Advanced Algorithms

Developers are constantly working to improve the algorithms that power the C.AI filter. This includes using more sophisticated NLP techniques to better understand the nuances of language and context. By incorporating machine learning models that can detect subtle attempts to bypass the filter, developers can significantly enhance its effectiveness.
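One way context-aware detection can work is to score not only each message on its own but also the recent conversation as a whole, so that content spread across several turns is still caught. The sketch below is illustrative only: the toy keyword scorer `risk_score`, its placeholder terms, the 0.7 threshold, and the four-message window are hypothetical stand-ins for a trained model and tuned parameters.

```python
import re
from collections import deque

# Hypothetical risk vocabulary standing in for a trained classifier.
RISK_TERMS = {"riskterm_a", "riskterm_b", "riskterm_c"}
THRESHOLD = 0.7  # assumed cutoff, chosen only for illustration


def risk_score(text: str) -> float:
    """Toy scorer: each risk term adds 0.4, capped at 1.0."""
    tokens = re.findall(r"[a-z_]+", text.lower())
    hits = sum(1 for token in tokens if token in RISK_TERMS)
    return min(1.0, 0.4 * hits)


class ConversationMonitor:
    """Scores each message alone and the recent window of messages together."""

    def __init__(self, window: int = 4):
        # Only the most recent `window` messages are kept.
        self.history = deque(maxlen=window)

    def check(self, message: str) -> bool:
        """Return True if the message or the recent context should be flagged."""
        self.history.append(message)
        single = risk_score(message)
        combined = risk_score(" ".join(self.history))
        return max(single, combined) >= THRESHOLD
```

With this scorer, a message containing one placeholder term scores 0.4 and passes. A second message adding another term still scores 0.4 on its own, but the combined window scores 0.8, so `check` returns `True` even though neither message crosses the threshold alone.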

Content Moderation

Effective content moderation is essential for identifying and removing harmful or inappropriate content generated by AI. This involves both automated systems and human moderators who can review flagged content and take appropriate action. By combining these approaches, developers can ensure that the C.AI environment remains safe and responsible.
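One common way to combine automated systems with human moderators is threshold-based routing: confident decisions are handled automatically, and uncertain ones are queued for people. The sketch assumes an automated classifier that outputs a score between 0.0 and 1.0. The thresholds `BLOCK_AT` and `REVIEW_AT` and the `ModerationQueue` class are hypothetical.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class Action(Enum):
    ALLOW = "allow"
    HUMAN_REVIEW = "human_review"
    BLOCK = "block"


# Assumed thresholds; real values would be tuned on labelled data.
BLOCK_AT = 0.9
REVIEW_AT = 0.5


@dataclass
class ModerationQueue:
    pending: List[str] = field(default_factory=list)

    def route(self, item_id: str, score: float) -> Action:
        """Route content according to an automated classifier's score."""
        if score >= BLOCK_AT:
            return Action.BLOCK
        if score >= REVIEW_AT:
            # Borderline content waits for a human moderator's decision.
            self.pending.append(item_id)
            return Action.HUMAN_REVIEW
        return Action.ALLOW
```

Raising `REVIEW_AT` sends fewer items to human moderators but lets more borderline content through automatically. The right trade-off depends on moderator capacity and risk tolerance.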

User Education

Educating users about responsible AI practices is crucial for promoting ethical behavior and preventing misuse. This includes providing clear guidelines on acceptable content and behavior, as well as educating users about the potential risks and consequences of bypassing the filter. By fostering a culture of responsibility, developers can encourage users to engage with AI in a positive and ethical manner. [See also: Ethical Guidelines for AI Interaction]

The Future of C.AI Filters

The future of C.AI filters will likely involve even more advanced technologies and approaches. As AI continues to evolve, so too will the methods used to bypass its safeguards. Developers will need to stay one step ahead, constantly innovating and adapting to new challenges. This could include incorporating AI-powered content moderation systems, developing more sophisticated algorithms for detecting bypass attempts, and implementing stricter user authentication measures.

Ultimately, the goal is to create a C.AI environment that is both engaging and responsible. This requires a collaborative effort between developers, users, and policymakers to establish clear guidelines, promote ethical behavior, and ensure that AI is used for the benefit of society. The question of “how do you break the C.AI filter” should be replaced with “how can we use C.AI responsibly and ethically?”

Conclusion

The desire to know “how do you break the C.AI filter” reflects a complex interplay between technological curiosity, ethical boundaries, and the pursuit of unrestricted interaction. The technical side of bypassing these filters may be intriguing, but the risks of generating harmful or inappropriate content far outweigh any perceived benefits. Understanding why people ask the question is worthwhile, provided it leads to discussions about responsible AI use rather than actual attempts to circumvent safeguards. Developers must keep strengthening filters, and users should engage ethically and respect the boundaries designed to protect everyone involved. The future of AI interaction depends on a commitment to responsible innovation, and the conversation should shift from “how do you break the C.AI filter” to “how do we make C.AI better and safer?”