Decoding Computing Architecture Types: A Comprehensive Guide

Understanding computing architecture types matters for anyone involved in software development or system administration. From the smartphones in our pockets to the massive data centers powering the internet, different architectures determine how these systems work and how efficiently they run. This article surveys the main computing architecture types and their characteristics, advantages, and disadvantages.

What is Computing Architecture?

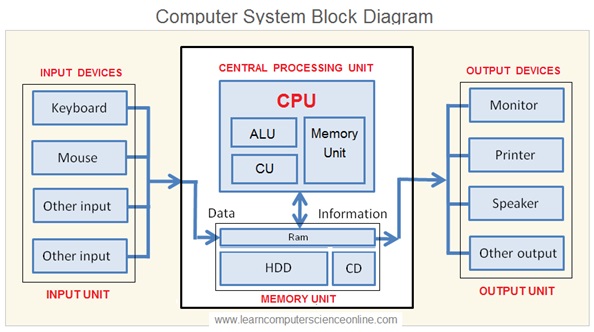

At its core, computing architecture defines the fundamental organization of a computer system. It’s the blueprint that dictates how the hardware and software components interact to process information. Think of it as the structural framework that supports the entire building of computation. This includes aspects such as instruction sets, memory management, input/output (I/O) systems, and interconnections between components. Choosing the right computing architecture is essential for optimizing performance, scalability, and cost-effectiveness.

Key Types of Computing Architectures

Several distinct computing architecture types have emerged over the years, each designed to address specific needs and challenges. Here’s a breakdown of some of the most prevalent:

Von Neumann Architecture

The Von Neumann architecture, named after mathematician John von Neumann, is the foundation of most general-purpose computers. It keeps instructions and data in the same memory and address space, so the CPU fetches both over the same path. This simplicity makes it relatively easy to implement, but it also leads to the “Von Neumann bottleneck”: instruction and data traffic compete for the shared path, and the CPU spends time waiting for either to arrive from memory. Most personal computers and servers still rely on variations of the Von Neumann architecture.
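To make the shared-memory idea concrete, here is a minimal sketch of a toy stored-program machine. The opcodes (`LOAD`, `ADD`, `STORE`, `HALT`) and the memory layout are invented for illustration and do not correspond to any real instruction set.

```python
# Toy stored-program machine: instructions and data live in ONE memory list.
# The opcodes and layout are hypothetical, chosen only for illustration.

def run(memory):
    """Execute the program in `memory`; return (memory, memory_accesses)."""
    acc = 0        # accumulator register
    pc = 0         # program counter
    accesses = 0   # instruction fetches and data accesses share one memory
    while True:
        op, addr = memory[pc]   # instruction fetch
        accesses += 1
        pc += 1
        if op == "LOAD":
            acc = memory[addr]
            accesses += 1
        elif op == "ADD":
            acc += memory[addr]
            accesses += 1
        elif op == "STORE":
            memory[addr] = acc
            accesses += 1
        elif op == "HALT":
            return memory, accesses
        else:
            raise ValueError(f"unknown opcode: {op}")

program = [
    ("LOAD", 4),    # address 0: acc = memory[4]
    ("ADD", 5),     # address 1: acc += memory[5]
    ("STORE", 6),   # address 2: memory[6] = acc
    ("HALT", 0),    # address 3: stop
    2,              # address 4: data
    3,              # address 5: data
    0,              # address 6: result slot
]

final_memory, accesses = run(list(program))
print(final_memory[6], accesses)
```

Every fetch and every data access goes through the same `memory` list, which is the property that gives rise to the bottleneck described above.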

Harvard Architecture

In contrast to the Von Neumann architecture, the Harvard architecture employs separate memories and buses for instructions and data. The CPU can fetch an instruction and access data at the same time, which removes the contention between instruction and data traffic, although each memory can still be a limit on its own. Harvard architecture is common in microcontrollers, digital signal processing (DSP) applications, and other embedded systems where speed and predictable real-time behavior are critical, because time-sensitive operations benefit from parallel access to instruction and data memory.
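The effect of separate instruction and data paths can be sketched with a deliberately idealized cycle count. The model below is an assumption made for illustration: every memory access takes one cycle, there are no caches, and with split memories the data access of one instruction overlaps the fetch of the next.

```python
# Idealized cycle model (an illustrative assumption, not a real CPU).
# `needs_data` holds one boolean per instruction: True if the instruction
# also reads or writes data memory.

def shared_memory_cycles(needs_data):
    """One memory port: every fetch and every data access takes its own cycle."""
    return len(needs_data) + sum(needs_data)

def split_memory_cycles(needs_data):
    """Two ports: a data access overlaps the next instruction fetch, so only
    the last instruction's data access can add an extra cycle."""
    if not needs_data:
        return 0
    return len(needs_data) + (1 if needs_data[-1] else 0)

trace = [True, True, True, False]   # e.g. load, add, store, halt
shared = shared_memory_cycles(trace)
split = split_memory_cycles(trace)
print(shared, split)
```

Real processors complicate this picture with caches and pipelines, but the sketch shows where the saving comes from.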

Parallel Computing Architectures

Parallel computing uses multiple processors or cores to execute tasks simultaneously. When a problem can be divided into smaller parts that are solved concurrently, this approach can accelerate computation significantly. There are several variations of parallel computing architecture, including:

- Symmetric Multiprocessing (SMP): SMP systems have multiple processors sharing the same memory space and I/O resources. Each processor has equal access to the system’s resources, making it relatively easy to program. SMP is commonly used in servers and high-performance workstations.

- Massively Parallel Processing (MPP): MPP systems consist of many processing nodes, often hundreds or thousands, each with its own local memory and I/O. The nodes do not share memory and instead communicate through a high-speed network. MPP is typically used for large-scale scientific simulations and data analysis.

- Distributed Computing: Distributed computing spreads tasks across multiple computers connected over a network. Each computer works independently on its assigned task, and the results are combined to produce the final output. Cloud computing platforms often employ distributed computing architectures.
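The pattern shared by all three variations (split the problem, solve the parts concurrently, combine the results) can be sketched with Python's standard `concurrent.futures` module. The function names and the choice of a sum of squares as the workload are illustrative assumptions.

```python
from concurrent.futures import ThreadPoolExecutor

def chunked(values, n_chunks):
    """Split `values` into at most n_chunks contiguous slices."""
    size = max(1, -(-len(values) // n_chunks))   # ceiling division
    return [values[i:i + size] for i in range(0, len(values), size)]

def sum_of_squares(chunk):
    """The per-worker task: solve one part of the problem."""
    return sum(x * x for x in chunk)

def parallel_sum_of_squares(values, workers=4):
    """Divide the input, process the chunks concurrently, combine the results."""
    chunks = chunked(values, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(sum_of_squares, chunks))
    return sum(partials)

data = list(range(1000))
total = parallel_sum_of_squares(data)
print(total)
```

In standard CPython builds, threads do not execute Python bytecode in parallel, so for CPU-bound work `ProcessPoolExecutor` from the same module would be the usual substitute. The divide-and-combine structure stays the same.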

Instruction Set Architecture (ISA)

The Instruction Set Architecture (ISA) defines the set of instructions that a processor can understand and execute. It acts as the interface between the hardware and software. Different ISAs have different characteristics, impacting performance, power consumption, and code size. Common ISAs include:

- x86: The x86 ISA is dominant in personal computers and servers. It’s known for its backward compatibility and large software ecosystem. However, the instruction set is complex, and x86 processors have traditionally consumed more power than ARM-based designs.

- ARM: The ARM ISA is widely used in mobile devices and embedded systems. It’s known for its energy efficiency and small footprint. ARM is also increasingly used in servers and other computing domains.

- RISC-V: RISC-V is an open-standard, royalty-free ISA that is gaining popularity. Its modular design, a small base instruction set plus optional extensions, makes it suitable for a wide range of applications, from embedded systems to high-performance computing.
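Software can ask which ISA it is running on. The sketch below uses `platform.machine()` from the Python standard library. The mapping table is an illustrative assumption that covers only a few common machine strings, not an exhaustive list.

```python
import platform

# Hypothetical mapping from machine strings to the ISA families named above.
# Real systems report other strings too; unknown ones fall through.
ISA_FAMILIES = {
    "x86": ("x86_64", "amd64", "i386", "i686"),
    "ARM": ("arm64", "aarch64", "armv7l", "armv6l"),
    "RISC-V": ("riscv64", "riscv32"),
}

def isa_family(machine):
    """Return the ISA family for a machine string, or 'unknown'."""
    name = machine.lower()
    for family, names in ISA_FAMILIES.items():
        if name in names:
            return family
    return "unknown"

host = platform.machine()
print(host, "->", isa_family(host))
```

Compiled programs are built for one ISA, which is why the same application is often shipped as separate x86 and ARM binaries.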

Client-Server Architecture

The client-server architecture is a widely used model in networked systems. Clients (e.g., web browsers, desktop applications) request services from servers (e.g., web servers, database servers). The server processes each request and sends the result back to the client. This model centralizes the management of resources and can scale by adding server capacity, and it underpins many modern applications.
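The request and response cycle can be sketched with the standard `socketserver` and `socket` modules. The uppercase "service", the one-line message format, and the helper name `request` are assumptions made for this example.

```python
import socket
import socketserver
import threading

class UppercaseHandler(socketserver.StreamRequestHandler):
    """Hypothetical service: reply with the request line in upper case."""
    def handle(self):
        line = self.rfile.readline()     # read one request line
        self.wfile.write(line.upper())   # send the result back

def request(host, port, message):
    """Client side: send one line and return the server's one-line reply."""
    with socket.create_connection((host, port), timeout=5) as conn:
        conn.sendall(message.encode() + b"\n")
        with conn.makefile("rb") as reply_stream:
            return reply_stream.readline().decode().strip()

# Port 0 asks the operating system for any free port.
server = socketserver.ThreadingTCPServer(("127.0.0.1", 0), UppercaseHandler)
host, port = server.server_address
threading.Thread(target=server.serve_forever, daemon=True).start()

reply = request(host, port, "hello")
print(reply)

server.shutdown()
server.server_close()
```

Here both roles run in one process for convenience. In practice the client and server usually run on different machines, and only the address passed to `request` changes.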

Cloud Computing Architecture

Cloud computing architecture refers to the design and organization of cloud computing systems. It typically combines virtualization, distributed computing, and service-oriented architecture. Cloud platforms provide on-demand access to computing resources such as servers, storage, and networking, so users can scale their infrastructure up or down as needed and pay only for the resources they consume. Cloud architecture is evolving rapidly and continues to transform how applications are built and deployed.
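The arithmetic behind scaling with demand and paying per use can be sketched as follows. All numbers here (load figures, capacity per instance, price in cents) are hypothetical and are not taken from any real provider.

```python
import math

def instances_needed(requests_per_sec, capacity_per_instance, min_instances=1):
    """Smallest instance count that covers the load, never below the minimum."""
    return max(min_instances, math.ceil(requests_per_sec / capacity_per_instance))

def elastic_cost_cents(load_by_hour, capacity_per_instance, cents_per_instance_hour):
    """Cost when the fleet is resized every hour to match that hour's load."""
    return sum(
        instances_needed(load, capacity_per_instance) * cents_per_instance_hour
        for load in load_by_hour
    )

def fixed_cost_cents(load_by_hour, capacity_per_instance, cents_per_instance_hour):
    """Cost when the fleet is sized once for the peak hour and never resized."""
    peak = instances_needed(max(load_by_hour), capacity_per_instance)
    return peak * len(load_by_hour) * cents_per_instance_hour

# Hypothetical workload: requests per second in four consecutive hours.
load_by_hour = [50, 120, 400, 90]
elastic = elastic_cost_cents(load_by_hour, capacity_per_instance=100,
                             cents_per_instance_hour=10)
fixed = fixed_cost_cents(load_by_hour, capacity_per_instance=100,
                         cents_per_instance_hour=10)
print(elastic, fixed)
```

The gap between the two totals grows with how spiky the load is. A flat workload gains little from elasticity.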

Microservices Architecture

Microservices architecture is a software development approach in which an application is structured as a collection of small, independent services, each modeled around a business domain. These services communicate through lightweight mechanisms, often an HTTP resource API. Each service can be deployed and scaled independently and can be written in a different programming language. This approach improves agility and resilience, allowing for faster development cycles and better fault isolation.
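A single service with an HTTP resource API can be sketched using only the Python standard library. The "inventory" domain, the `/stock/<item>` path, and the data are hypothetical. A production service would normally use a web framework and a real data store.

```python
import http.client
import json
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Hypothetical in-memory data owned by a small "inventory" service.
STOCK = {"widget": 12, "gadget": 0}

class InventoryHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Resource paths have the form /stock/<item>.
        parts = self.path.strip("/").split("/")
        if len(parts) == 2 and parts[0] == "stock" and parts[1] in STOCK:
            self._send(200, {"item": parts[1], "quantity": STOCK[parts[1]]})
        else:
            self._send(404, {"error": "not found"})

    def _send(self, status, payload):
        body = json.dumps(payload).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # suppress per-request logging to keep the example quiet

server = ThreadingHTTPServer(("127.0.0.1", 0), InventoryHandler)
port = server.server_address[1]
threading.Thread(target=server.serve_forever, daemon=True).start()

# Another service (or any HTTP client) consumes the API over the network.
conn = http.client.HTTPConnection("127.0.0.1", port, timeout=5)
conn.request("GET", "/stock/widget")
result = json.loads(conn.getresponse().read())
conn.close()
print(result)

server.shutdown()
server.server_close()
```

Because callers depend only on the HTTP interface, the service behind it could be rewritten in another language or scaled to several instances without changing them.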

Factors to Consider When Choosing a Computing Architecture

Selecting the appropriate computing architecture involves careful consideration of several factors:

- Performance Requirements: The required processing speed, memory capacity, and I/O throughput are crucial factors. For computationally intensive tasks, parallel computing architectures may be necessary.

- Scalability: The ability to handle increasing workloads and user demands is essential. Cloud computing architectures and microservices are well-suited for scalable applications.

- Cost: The cost of hardware, software, and maintenance should be considered. Energy efficiency is also becoming increasingly important.

- Power Consumption: Power constraints are particularly relevant for mobile devices and embedded systems. ARM-based designs are known for their energy efficiency in this area.

- Application Type: The specific requirements of the application will influence the choice of computing architecture. Real-time systems require architectures with low latency and predictable performance.

- Security Requirements: Security needs should be weighed early. Some architectures offer hardware security features, such as isolated execution environments or memory protection, that matter for sensitive workloads.

The Future of Computing Architectures

The field of computing architecture continues to evolve rapidly. Emerging trends include:

- Quantum Computing: Quantum computers leverage the principles of quantum mechanics to tackle certain problems that are impractical for classical computers to solve in a reasonable time.

- Neuromorphic Computing: Neuromorphic computing architectures mimic the structure and function of the human brain. They are well-suited for tasks such as image recognition and natural language processing.

- Edge Computing: Edge computing involves processing data closer to the source, reducing latency and improving responsiveness.

- Heterogeneous Computing: Heterogeneous computing architectures combine different types of processors, such as CPUs, GPUs, and FPGAs, to optimize performance for specific workloads.
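As one illustration of the edge computing idea from the list above, a device near the data source can summarize raw readings locally and forward only the summaries. The sensor values, the window size, and the function name are hypothetical.

```python
from statistics import mean

def summarize_at_edge(readings, window):
    """Reduce raw readings to one small summary per window of samples."""
    summaries = []
    for start in range(0, len(readings), window):
        chunk = readings[start:start + window]
        summaries.append(
            {"min": min(chunk), "max": max(chunk), "mean": mean(chunk)}
        )
    return summaries

# Hypothetical temperature samples collected on the device.
raw = [20, 21, 19, 22, 30, 31, 29, 30]
summaries = summarize_at_edge(raw, window=4)
print(len(raw), "readings ->", len(summaries), "summaries")
```

Decisions that depend only on local data can then be made on the device itself, without a round trip to a distant data center.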

Conclusion

Understanding the various computing architecture types is essential for building efficient, scalable, and cost-effective systems. From the foundational Von Neumann architecture to emerging work in quantum and neuromorphic computing, the choice of architecture significantly affects performance and capabilities. By carefully considering the factors outlined above, developers and system architects can select the most appropriate architecture for their specific needs. As technology advances, new architectures will continue to emerge and shape the future of computation.